Could you do your job without a computer? As a child in the 1970s, I was told that computers would take all of our jobs. Yet here I am, working in a career that wouldn’t exist without computers. Most modern jobs require computers for emails, report writing, or videoconferences. Rather than replacing our jobs, computers have created new jobs and made existing jobs more human-centric, as we delegate tedious mechanistic tasks to machines.

I love watching the movie Hidden Figures, which showcases the social and technological revolutions of the 1960s. During the movie’s early moments, we are introduced to three computers: Mary Jackson, Katherine Johnson, and Dorothy Vaughan. In contrast to the popular science fiction theme of human-like robots, these computers were human. Back then, “computer” was a job title. Towards the end of the movie, we see NASA install its first machine computer. But there was a twist to the story – the human computers adapted and taught themselves to become highly valued programmers of the new machines.

AI Hype Versus Narrow AI

The topic of artificial intelligence can be very divisive. Some people think that AI is going to help us to solve new problems. There’s another school of thought that believes that AI might one day turn against us. This more pessimistic school of thought is based upon the premise that computers will become more intelligent than humans, making us obsolete and maybe even becoming our masters.

As far back as 1965, Irving Good, a British mathematician who worked as a cryptologist at Bletchley Park with Alan Turing, speculated:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind… Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.”

The singularity is a hypothetical point in time when technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization. In one version of this narrative, an AI will enter a “runaway reaction” of self-improvement. Each new and more intelligent generation of AI will appear more and more rapidly, resulting in a powerful superintelligence that far surpasses all human intelligence.

But we are nowhere near ultraintelligence. In the present moment, we have narrow AI. You can train an AI to do one narrowly defined task under certain controlled circumstances. It has no common sense. It has no general knowledge. It has no idea of right and wrong. It does not know when it’s getting things wrong. It just knows what it was taught. It’s a bit like a sausage machine: perfectly okay as long as you’re making sausages with it, but try it on anything else and you’re just going to make a mess. The beauty of narrow AI is that many of the tasks it can do are things we were wasting human talent on. For example, I’m old enough to remember when we were not allowed to use calculators in high school. Calculators were going to destroy our ability to do mathematics. Teachers taught us to use log tables. It’s a wonder that all these manual calculations didn’t turn us away from maths forever!

Humans have general intelligence, the ability to do many diverse tasks. However, narrow AI cannot multitask. Organizations need dozens, if not hundreds, of narrow AIs, each doing a single task and contributing to the whole. Even a single use case could require multiple AIs. For example, even a customer churn reduction use case could have several AIs. One could predict which customers are likely to churn. Another AI could predict which customers could change their minds if the customer retention team took action. A third AI could choose the optimal action to take to retain each customer. A fourth AI could predict which customers are worth keeping, i.e., which customers will be profitable in the future.
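To make the churn example concrete, here is a minimal sketch of how four narrow models might be chained. Everything in it is hypothetical: the `Customer` fields, the thresholds, and the hand-written rules are stand-ins for trained models, used only to show that each piece answers one question and the combination produces the decision.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    months_inactive: int
    complaints: int
    annual_margin: float


# Each function stands in for one narrow AI. The rules are made up for
# illustration; in practice each would be a separately trained model.
def churn_risk(c: Customer) -> float:
    """AI 1: how likely is this customer to churn (0.0 to 1.0)?"""
    return min(1.0, 0.2 * c.months_inactive + 0.1 * c.complaints)


def is_persuadable(c: Customer) -> bool:
    """AI 2: would a retention action be likely to change their mind?"""
    return c.complaints <= 2


def best_action(c: Customer) -> str:
    """AI 3: which retention action suits this customer?"""
    return "discount" if c.months_inactive >= 3 else "check-in call"


def is_worth_keeping(c: Customer) -> bool:
    """AI 4: is this customer expected to be profitable?"""
    return c.annual_margin > 0


def retention_plan(customers: list[Customer]) -> dict[str, str]:
    """Combine the four narrow answers into one action per customer."""
    plan = {}
    for c in customers:
        if churn_risk(c) >= 0.5 and is_persuadable(c) and is_worth_keeping(c):
            plan[c.name] = best_action(c)
    return plan


customers = [
    Customer("Ana", months_inactive=4, complaints=1, annual_margin=500.0),
    Customer("Ben", months_inactive=0, complaints=0, annual_margin=300.0),
    Customer("Cy", months_inactive=5, complaints=4, annual_margin=800.0),
    Customer("Dee", months_inactive=3, complaints=0, annual_margin=-50.0),
]
print(retention_plan(customers))
```

None of the four functions could run a retention program alone, which is the point: the use case only works when the narrow pieces are combined.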

There are three reasons why we don’t have ultra-intelligent AI.

- Computing power limitations: The complexity of AI models is growing faster than improvements in computer hardware. For example, state-of-the-art language models are increasing in size by at least a factor of ten every year. Yet, according to Moore’s Law, computing power only doubles roughly every two years.

- Cost limitations: The cost of training and running AI models increases with their complexity and size. It is prohibitively expensive to train complex AI models. For example, training a 175-billion-parameter neural network requires 3.114e23 FLOPs (floating-point operations), which would theoretically take 355 years on a single V100 GPU server with 28 TFLOPS capacity and cost $4.6 million at $1.50 per hour. On top of the monetary cost, there is the environmental cost. Researchers estimated that the carbon footprint of training OpenAI’s giant GPT-3 text-generating model is similar to driving a gasoline-engined car to the Moon and back. Even if it were technically possible, using current technology to replicate human intelligence would take up more than the world’s entire energy budget.

- Technical limitations: The current generation of AI is powered by machine learning, a form of pattern recognition. Narrow AI doesn’t understand what it is saying or doing. It is merely following patterns found in data. AI systems still make simple errors that any human can spot. For example, when asked which is heavier, a toaster or a pencil, GPT-3 declared that a pencil is heavier than a toaster. Sam Altman, a leader at OpenAI, tweeted about his AI model GPT-3, “The GPT-3 hype is way too much. It’s impressive (thanks for the nice compliments!), but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse. We have a lot still to figure out.”
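The arithmetic behind the first two limitations can be checked with a short back-of-the-envelope sketch. It uses only the figures quoted above (3.114e23 floating-point operations, a 28 TFLOPS server, $1.50 per hour, tenfold yearly model growth, hardware doubling every two years). The function names are my own, and the calculation assumes a single device running at full speed with no overhead, so treat the output as a rough estimate that lands close to the quoted figures rather than an exact reproduction.

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_YEAR = 24 * 365


def training_estimate(total_flop, flop_per_second, dollars_per_hour):
    """Return (years, dollars) to run total_flop on one device at full speed."""
    hours = total_flop / flop_per_second / SECONDS_PER_HOUR
    return hours / HOURS_PER_YEAR, hours * dollars_per_hour


def growth_gap(years, model_growth_per_year=10.0, compute_doubling_years=2.0):
    """Ratio of model-size growth to hardware growth after `years` years."""
    model_growth = model_growth_per_year ** years
    compute_growth = 2.0 ** (years / compute_doubling_years)
    return model_growth / compute_growth


# Figures taken from the text: 3.114e23 operations, 28 TFLOPS, $1.50 per hour.
years, dollars = training_estimate(3.114e23, 28e12, 1.50)
print(f"about {years:.0f} years and ${dollars / 1e6:.1f} million")

# Tenfold yearly model growth versus hardware doubling every two years.
print(f"gap after 4 years: {growth_gap(4):,.0f}x")
```

The second function shows why hardware alone cannot close the gap: under these assumptions, model size outpaces computing power by a factor that itself grows every year.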

To move AI forward, we need to find a fundamentally different approach, and at the moment, we don’t have any promising ideas of how to do that.

Comparative Advantage

The critical reason that AI won’t simply replace humans is the well-known economic principle of comparative advantage. David Ricardo developed the classical theory of comparative advantage in 1817 to explain why countries engage in international trade even when one country’s workers are more efficient at producing every single good than workers in other countries. It isn’t the absolute cost or efficiency that determines which country supplies which goods or services. Optimal production allocation follows the relative strengths or advantages of producing each good or service within each country and the opportunity cost of not specializing in your strengths. The same principle applies to humans and computers.
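A small worked example may help here. The sketch below uses made-up productivity numbers (they are not from any study) in which the AI is faster than the human at both tasks, yet the human still holds the comparative advantage in one of them because the AI gives up far more by being pulled away from the task it is best at.

```python
# Hypothetical output per hour; the numbers are illustrative only.
output_per_hour = {
    "AI": {"invoices_processed": 100.0, "complaints_resolved": 2.0},
    "human": {"invoices_processed": 10.0, "complaints_resolved": 1.0},
}


def opportunity_cost(worker, task, other_task):
    """Units of other_task given up to produce one unit of task."""
    rates = output_per_hour[worker]
    return rates[other_task] / rates[task]


def comparative_advantage(task, other_task):
    """Return the worker with the lowest opportunity cost for task."""
    return min(
        output_per_hour,
        key=lambda worker: opportunity_cost(worker, task, other_task),
    )


for task, other in [
    ("invoices_processed", "complaints_resolved"),
    ("complaints_resolved", "invoices_processed"),
]:
    print(task, "->", comparative_advantage(task, other))
```

With these numbers, each complaint the AI resolves costs 50 unprocessed invoices, while each complaint the human resolves costs only 10. Allocation follows opportunity cost, not raw speed, which is Ricardo's point applied to humans and machines.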

Computers are best at repetitive tasks, mathematics, data manipulation, and parallel processing. These comparative strengths are what propelled the Third Industrial Revolution, which gave us today’s digital technology. Many of our business processes already take advantage of these strengths. Banks have massive computer systems that handle transactions in real-time. Marketers use customer relationship management software to store information about millions of customers. If a task is repetitive, frequent, has a predictable outcome, and you have data to reach that outcome, automate that workflow.

Humans are strongest at communication and engagement, context and general knowledge, common sense, creativity, and empathy. Humans are inherently social creatures. Research shows that customers prefer to deal with humans, especially for issues that generate emotion, such as when they experience a problem and want help solving it.

Jobs Versus Tasks

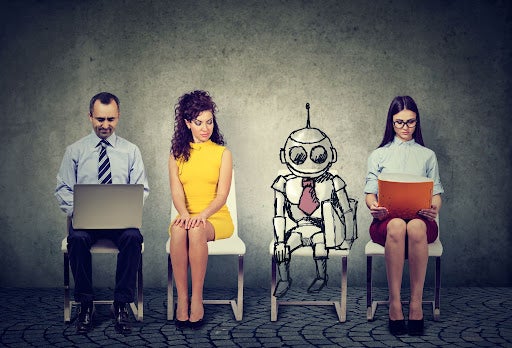

The key reason that an AI will not take your job is that AIs can’t do jobs.

A job is not the same thing as a task. Jobs require multiple tasks. A narrow AI can do a single well-defined task, but it cannot do a job. Thankfully, the tasks that narrow AI is best at are also the mundane and repetitive inhuman tasks that humans least like doing.

The future of work will be transformed task-by-task, not job-by-job. By analyzing which tasks will be automated or augmented, organizations can determine how each job will be affected. Rather than replacing employees, a successful organization will redesign jobs to be human-centric rather than process-centric.

The future isn’t AI versus humans – it is AI-augmented humans doing what humans are best at.

Humans and AI Best Practices

Fearful employees can be blockers to organizational change. Human-centric job redesign can be a win/win situation for employers and employees.

Since narrow AI does tasks, AI use case selection and prioritization is vital to success. With few people having experience in AI transformation, find a partner who will design an AI success plan. They can host use case ideation workshops and educate your employees. The goal is for your organization to become self-sufficient, in control of its AI destiny.

You need to manage all of the AIs deployed across your organization. But with dozens or hundreds of narrow AI models in production, ad-hoc AI governance can rapidly become overwhelmingly complex. Modern MLOps systems and practices can tame this complexity, empowering and augmenting human employees to practically manage your AI ecosystem.

About the author

VP, AI Strategy, DataRobot

Colin Priest is the VP of AI Strategy for DataRobot, where he advises businesses on how to build business cases and successfully manage data science projects. Colin has held a number of CEO and general management roles, where he has championed data science initiatives in financial services, healthcare, security, oil and gas, government and marketing. Colin is a firm believer in data-based decision making and applying automation to improve customer experience. He is passionate about the science of healthcare and does pro-bono work to support cancer research.
