[ad_1]

Mixture of experts is an ensemble learning technique developed in the field of neural networks.

It involves decomposing predictive modeling tasks into sub-tasks, training an expert model on each, developing a gating model that learns which expert to trust based on the input to be predicted, and combines the predictions.

Although the technique was initially described using neural network experts and gating models, it can be generalized to use models of any type. As such, it shows a strong similarity to stacked generalization and belongs to the class of ensemble learning methods referred to as meta-learning.

In this tutorial, you will discover the mixture of experts approach to ensemble learning.

After completing this tutorial, you will know:

- An intuitive approach to ensemble learning involves dividing a task into subtasks and developing an expert on each subtask.

- Mixture of experts is an ensemble learning method that seeks to explicitly address a predictive modeling problem in terms of subtasks using expert models.

- The divide and conquer approach is related to the construction of decision trees, and the meta-learner approach is related to the stacked generalization ensemble method.

Kick-start your project with my new book Ensemble Learning Algorithms With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

A Gentle Introduction to Mixture of Experts Ensembles

Photo by Radek Kucharski, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Subtasks and Experts

- Mixture of Experts

- Subtasks

- Expert Models

- Gating Model

- Pooling Method

- Relationship With Other Techniques

- Mixture of Experts and Decision Trees

- Mixture of Experts and Stacking

Subtasks and Experts

Some predictive modeling tasks are remarkably complex, although they may be suited to a natural division into subtasks.

For example, consider a one-dimensional function that has a complex shape like an S in two dimensions. We could attempt to devise a model that models the function completely, but if we know the functional form, the S-shape, we could also divide up the problem into three parts: the curve at the top, the curve at the bottom and the line connecting the curves.

This is a divide and conquer approach to problem-solving and underlies many automated approaches to predictive modeling, as well as problem-solving more broadly.

This approach can also be explored as the basis for developing an ensemble learning method.

For example, we can divide the input feature space into subspaces based on some domain knowledge of the problem. A model can then be trained on each subspace of the problem, being in effect an expert on the specific subproblem. A model then learns which expert to call upon to predict new examples in the future.

The subproblems may or may not overlap, and experts from similar or related subproblems may be able to contribute to the examples that are technically outside of their expertise.

This approach to ensemble learning underlies a technique referred to as a mixture of experts.

Mixture of Experts

Mixture of experts, MoE or ME for short, is an ensemble learning technique that implements the idea of training experts on subtasks of a predictive modeling problem.

In the neural network community, several researchers have examined the decomposition methodology. […] Mixture–of–Experts (ME) methodology that decomposes the input space, such that each expert examines a different part of the space. […] A gating network is responsible for combining the various experts.

— Page 73, Pattern Classification Using Ensemble Methods, 2010.

There are four elements to the approach, they are:

- Division of a task into subtasks.

- Develop an expert for each subtask.

- Use a gating model to decide which expert to use.

- Pool predictions and gating model output to make a prediction.

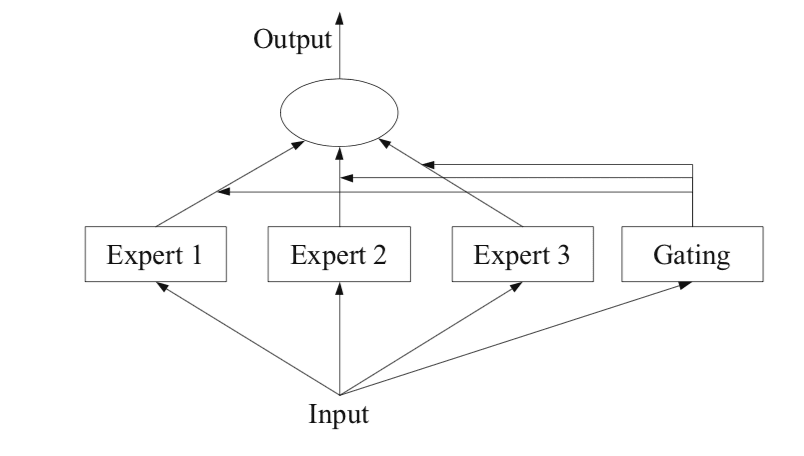

The figure below, taken from Page 94 of the 2012 book “Ensemble Methods,” provides a helpful overview of the architectural elements of the method.

Example of a Mixture of Experts Model with Expert Members and a Gating Network

Taken from: Ensemble Methods

Subtasks

The first step is to divide the predictive modeling problem into subtasks. This often involves using domain knowledge. For example, an image could be divided into separate elements such as background, foreground, objects, colors, lines, and so on.

… ME works in a divide-and-conquer strategy where a complex task is broken up into several simpler and smaller subtasks, and individual learners (called experts) are trained for different subtasks.

— Page 94, Ensemble Methods, 2012.

For those problems where the division of the task into subtasks is not obvious, a simpler and more generic approach could be used. For example, one could imagine an approach that divides the input feature space by groups of columns or separates examples in the feature space based on distance measures, inliers, and outliers for a standard distribution, and much more.

… in ME, a key problem is how to find the natural division of the task and then derive the overall solution from sub-solutions.

— Page 94, Ensemble Methods, 2012.

Expert Models

Next, an expert is designed for each subtask.

The mixture of experts approach was initially developed and explored within the field of artificial neural networks, so traditionally, experts themselves are neural network models used to predict a numerical value in the case of regression or a class label in the case of classification.

It should be clear that we can “plug in” any model for the expert. For example, we can use neural networks to represent both the gating functions and the experts. The result is known as a mixture density network.

— Page 344, Machine Learning: A Probabilistic Perspective, 2012.

Experts each receive the same input pattern (row) and make a prediction.

Gating Model

A model is used to interpret the predictions made by each expert and to aid in deciding which expert to trust for a given input. This is called the gating model, or the gating network, given that it is traditionally a neural network model.

The gating network takes as input the input pattern that was provided to the expert models and outputs the contribution that each expert should have in making a prediction for the input.

… the weights determined by the gating network are dynamically assigned based on the given input, as the MoE effectively learns which portion of the feature space is learned by each ensemble member

— Page 16, Ensemble Machine Learning, 2012.

The gating network is key to the approach and effectively the model learns to choose the type subtask for a given input and, in turn, the expert to trust to make a strong prediction.

Mixture-of-experts can also be seen as a classifier selection algorithm, where individual classifiers are trained to become experts in some portion of the feature space.

— Page 16, Ensemble Machine Learning, 2012.

When neural network models are used, the gating network and the experts are trained together such that the gating network learns when to trust each expert to make a prediction. This training procedure was traditionally implemented using expectation maximization (EM). The gating network might have a softmax output that gives a probability-like confidence score for each expert.

In general, the training procedure tries to achieve two goals: for given experts, to find the optimal gating function; for a given gating function, to train the experts on the distribution specified by the gating function.

— Page 95, Ensemble Machine Learning, 2012.

Pooling Method

Finally, the mixture of expert models must make a prediction, and this is achieved using a pooling or aggregation mechanism. This might be as simple as selecting the expert with the largest output or confidence provided by the gating network.

Alternatively, a weighted sum prediction could be made that explicitly combines the predictions made by each expert and the confidence estimated by the gating network. You might imagine other approaches to making effective use of the predictions and gating network output.

The pooling/combining system may then choose a single classifier with the highest weight, or calculate a weighted sum of the classifier outputs for each class, and pick the class that receives the highest weighted sum.

— Page 16, Ensemble Machine Learning, 2012.

Want to Get Started With Ensemble Learning?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

Download Your FREE Mini-Course

Relationship With Other Techniques

The mixture of experts method is less popular today, perhaps because it was described in the field of neural networks.

Nevertheless, more than 25 years of advancements and exploration of the technique have occurred and you can see a great summary in the 2012 paper “Twenty Years of Mixture of Experts.”

Importantly, I’d recommend considering the broader intent of the technique and explore how you might use it on your own predictive modeling problems.

For example:

- Are there obvious or systematic ways that you can divide your predictive modeling problem into subtasks?

- Are there specialized methods that you can train on each subtask?

- Consider developing a model that predicts the confidence of each expert model.

Mixture of Experts and Decision Trees

We can also see a relationship between a mixture of experts to Classification And Regression Trees, often referred to as CART.

Decision trees are fit using a divide and conquer approach to the feature space. Each split is chosen as a constant value for an input feature and each sub-tree can be considered a sub-model.

Mixture of experts was mostly studied in the neural networks community. In this thread, researchers generally consider a divide-and-conquer strategy, try to learn a mixture of parametric models jointly and use combining rules to get an overall solution.

— Page 16, Ensemble Methods, 2012.

We could take a similar recursive decomposition approach to decomposing the predictive modeling task into subproblems when designing the mixture of experts. This is generally referred to as a hierarchical mixture of experts.

The hierarchical mixtures of experts (HME) procedure can be viewed as a variant of tree-based methods. The main difference is that the tree splits are not hard decisions but rather soft probabilistic ones.

— Page 329, The Elements of Statistical Learning, 2016.

Unlike decision trees, the division of the task into subtasks is often explicit and top-down. Also, unlike a decision tree, the mixture of experts attempts to survey all of the expert submodels rather than a single model.

There are other differences between HMEs and the CART implementation of trees. In an HME, a linear (or logistic regression) model is fit in each terminal node, instead of a constant as in CART. The splits can be multiway, not just binary, and the splits are probabilistic functions of a linear combination of inputs, rather than a single input as in the standard use of CART.

— Page 329, The Elements of Statistical Learning, 2016.

Nevertheless, these differences might inspire variations on the approach for a given predictive modeling problem.

For example:

- Consider automatic or general approaches to dividing the feature space or problem into subtasks to help to broaden the suitability of the method.

- Consider exploring both combination methods that trust the best expert, as well as methods that seek a weighted consensus across experts.

Mixture of Experts and Stacking

The application of the technique does not have to be limited to neural network models and a range of standard machine learning techniques can be used in place seeking a similar end.

In this way, the mixture of experts method belongs to a broader class of ensemble learning methods that would also include stacked generalization, known as stacking. Like a mixture of experts, stacking trains a diverse ensemble of machine learning models and then learns a higher-order model to best combine the predictions.

We might refer to this class of ensemble learning methods as meta-learning models. That is models that attempt to learn from the output or learn how to best combine the output of other lower-level models.

Meta-learning is a process of learning from learners (classifiers). […] In order to induce a meta classifier, first the base classifiers are trained (stage one), and then the Meta classifier (second stage).

— Page 82, Pattern Classification Using Ensemble Methods, 2010.

Unlike a mixture of experts, stacking models are often all fit on the same training dataset, e.g. no decomposition of the task into subtasks. And also unlike a mixture of experts, the higher-level model that combines the predictions from the lower-level models typically does not receive the input pattern provided to the lower-level models and instead takes as input the predictions from each lower-level model.

Meta-learning methods are best suited for cases in which certain classifiers consistently correctly classify, or consistently misclassify, certain instances.

— Page 82, Pattern Classification Using Ensemble Methods, 2010.

Nevertheless, there is no reason why hybrid stacking and mixture of expert models cannot be developed that may perform better than either approach in isolation on a given predictive modeling problem.

For example:

- Consider treating the lower-level models in stacking as experts trained on different perspectives of the training data. Perhaps this could involve using a softer approach to decomposing the problem into subproblems where different data transforms or feature selection methods are used for each model.

- Consider providing the input pattern to the meta model in stacking in an effort to make the weighting or contribution of lower-level models conditional on the specific context of the prediction.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

Books

Articles

Summary

In this tutorial, you discovered mixture of experts approach to ensemble learning.

Specifically, you learned:

- An intuitive approach to ensemble learning involves dividing a task into subtasks and developing an expert on each subtask.

- Mixture of experts is an ensemble learning method that seeks to explicitly address a predictive modeling problem in terms of subtasks using expert models.

- The divide and conquer approach is related to the construction of decision trees, and the meta-learner approach is related to the stacked generalization ensemble method.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

[ad_2]

Source link