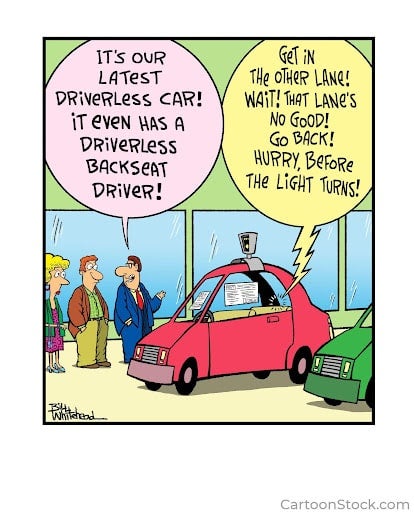

I have a confession to make—I’m a back-seat driver! When sitting in a taxi, I can’t help but grumble when the ride isn’t smooth, or the driver chooses the slowest lane of traffic. I have to fight the urge to take control.

When it comes to shopping, I passively accept what is offered for sale. But my wife, who grew up in Asia where haggling is part of the culture, is different. She loves to haggle, asking for a different color, a price discount, or free delivery. Despite our apparent differences, my wife and I have something in common. We both want to influence the outcome of a process that affects us, and research shows that this is common consumer behavior.

How will the need for control affect the success of digital transformation and organizational change? Will consumer behavior change when inflexible data-driven AI systems are the norm? Or should AI systems be adapted to work around human behavior?

Haggling

In classical economics, it is assumed that consumers are homo economicus, a theoretical person who makes purely rational decisions to maximize their monetary gains. Therefore, it was assumed that consumers’ primary motivation to haggle is to obtain a better dollar value for their purchases.

In the late twentieth century, behavioral scientists began challenging the assumptions of economists. They designed experiments that tested real-life human behaviors against those expected from economic theory.

One such study, Noneconomic Motivations for Price Haggling, examined the motives of consumers who had recently engaged in price bargaining. The authors concluded that people were fulfilling three primary needs when haggling:

- achievement,

- dominance, and

- affiliation.

The need for achievement occurs when individuals want to accomplish difficult tasks, overcome obstacles, attain high personal standards, and surpass others. Some people have a need to do everything well. Bargaining gives them feelings of competence and mastery.

The need for dominance is a need for power. Some people need to control their environment and influence outcomes. Other people enjoy haggling as competition against others. It is a win when they change the outcome.

Finally, the need for affiliation is a need for friendship, acceptance, and belonging. For some people, bargaining is a social activity to enjoy with friends and family. Others enjoy the storytelling when they recall a haggling result to others.

In the bestselling marketing textbook Consumer Behavior, the authors Schiffman and Kanuk conclude, “Many individuals experience increased self-esteem when they exercise power over objects or people.” Since some consumers receive noneconomic benefits from bargaining, organizations should think twice before implementing rigid processes where staff and customers have no control over the outcomes.

Algorithm Aversion

Haggling isn’t the only way in which humans depart from the behavior that economic theory predicts.

There is a wealth of research showing again and again that evidence-based algorithms are more accurate than forecasts made by humans. Yet decision makers often shun algorithms, opting instead for the less accurate judgments of humans.

Research has shown that people react more negatively to an incorrect decision when it is made by an AI than when it is made by a human. They set high, almost perfectionist expectations for AI. People are much more likely to choose human rather than algorithmic forecasts once they have seen an algorithm perform and learned it is imperfect.

Our failure to use an imperfect algorithm persists even when we have seen the algorithm outperform humans and accept that it does so on average. The reluctance of people to use superior algorithms that they know to be imperfect is called algorithm aversion.

Since many real-world outcomes are far from perfectly predictable, even the best algorithms are not perfect and will trigger algorithm aversion in staff and customers. Solving algorithm aversion is a key to successful digital transformation. Until organizations overcome the problem of algorithm aversion, they will underperform their peers.

Augmented Humans

If people’s aversion to imperfect algorithms is driven by an intolerance of inevitable errors, then maybe they will be more open to using algorithms if they are given the opportunity to eliminate or reduce an AI system’s errors.

A carefully crafted combination of human and algorithm decisions can outperform their individual contributions to a decision. For example, one study showed that the best mammogram cancer screening accuracy was achieved by combining the results of a radiologist with an AI cancer detection algorithm. The combination of human and AI outperformed a radiologist with a second opinion from another radiologist.

However, studies show that people’s attempts to adjust algorithmic forecasts often make the forecasts worse. The good news is that when people adjust an algorithm’s output, they usually still achieve better results than purely human decisions.

What if the benefits associated with getting people to use an algorithm outweigh the costs associated with degrading the algorithm’s performance? What is the optimal balance between algorithm performance versus humans’ algorithm aversion?

In their paper, Overcoming Algorithm Aversion: People Will Use Imperfect Algorithms If They Can (Even Slightly) Modify Them, researchers reported the results of experiments that asked three questions:

- Will people use an algorithm more if they are able to adjust its results?

- How sensitive are people to limits on the magnitude of the adjustments they can make?

- Are people more satisfied with the accuracy of an algorithm that they adjusted?

The results were encouraging on all three questions. People will choose to use an imperfect algorithm’s forecasts substantially more often when they can modify those forecasts, even if they are able to make only small adjustments. They are insensitive to how little they are allowed to adjust an imperfect algorithm’s forecasts, or to whether there are any limits at all. And finally, people who have the ability to adjust an algorithm’s outputs believe it performs better than do people who lack that ability. Because people care so little about the size of the limit, constraining the amount by which they can adjust an algorithm’s forecasts leads to better performance.
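One way to picture a constrained adjustment is as a clamp around the algorithm’s forecast. The following is a minimal Python sketch, not the researchers’ experimental setup; the function name `apply_bounded_adjustment` and the `max_adjustment` parameter are hypothetical and chosen only for illustration.

```python
def apply_bounded_adjustment(
    model_forecast: float,
    human_forecast: float,
    max_adjustment: float,
) -> float:
    """Move the model's forecast toward the human's preferred value,
    but never by more than max_adjustment in either direction.

    All names here are illustrative assumptions, not part of any study or product.
    """
    if max_adjustment < 0:
        raise ValueError("max_adjustment must be non-negative")

    # How far the person would like to move the forecast.
    requested_change = human_forecast - model_forecast

    # Clamp the requested change to the allowed band.
    allowed_change = max(-max_adjustment, min(max_adjustment, requested_change))

    return model_forecast + allowed_change
```

For example, if the algorithm forecasts 70, the person would prefer 90, and the limit is 5, the final forecast is 75. The person still influences the outcome, while the result stays close to the algorithm’s estimate.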

These findings have important implications for those pursuing organizational change and trying to increase employees’ and customers’ use of algorithms. There are substantial benefits to allowing people to modify an algorithm’s outputs. The researchers concluded that “It increases their satisfaction with the process, their confidence in and perceptions of the model relative to themselves, and their use of the model on subsequent forecasts.” Conversely, you should avoid designing processes in which a human must passively accept an AI decision.

Humans and AI Best Practices

AI success requires people, process, and technology. You need a human-centric AI success plan. Start by understanding the needs and very human behaviors of the staff and customers who will be interacting with your AI system. Design processes where humans are augmented, not controlled, and where people can influence outcomes and make choices, even with a limited set of options. By respecting human dignity and empowering people to make their own choices, you will have a smoother path to organizational change, more accurate decisions, and more successful business outcomes.

Choose modern AI systems that are able to intuitively explain their decisions. Show end-users personalized what-if scenarios that demonstrate how changing their behavior and their choices changes the decisions that the AI makes.
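As a rough illustration of a what-if scenario, the sketch below compares a decision before and after a person changes one of their inputs. Everything in it is an assumption made for the example: `toy_approval_model`, its feature names, its weights, and its threshold are hypothetical stand-ins, not a real scoring model or any vendor’s API.

```python
from typing import Callable, Dict, Tuple

Features = Dict[str, float]


def toy_approval_model(features: Features) -> bool:
    """Hypothetical stand-in for an AI decision.

    Approves when a simple made-up score reaches a made-up threshold.
    """
    score = 2.0 * features["on_time_payments"] - 1.0 * features["missed_payments"]
    return score >= 10.0


def what_if(
    model: Callable[[Features], bool],
    features: Features,
    changes: Features,
) -> Tuple[bool, bool]:
    """Return (current decision, decision after applying the changes).

    The original features are left untouched; the scenario is a copy.
    """
    scenario = {**features, **changes}
    return model(features), model(scenario)


# A hypothetical customer asks: "What if I had no missed payments?"
customer = {"on_time_payments": 5.0, "missed_payments": 2.0}
current, after_change = what_if(toy_approval_model, customer, {"missed_payments": 0.0})
```

In this toy case the current decision is a decline and the what-if decision is an approval, which shows the person exactly which change in behavior would alter the outcome.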

About the author

VP, AI Strategy, DataRobot

Colin Priest is the VP of AI Strategy for DataRobot, where he advises businesses on how to build business cases and successfully manage data science projects. Colin has held a number of CEO and general management roles, where he has championed data science initiatives in financial services, healthcare, security, oil and gas, government and marketing. Colin is a firm believer in data-based decision making and applying automation to improve customer experience. He is passionate about the science of healthcare and does pro-bono work to support cancer research.
