
Evaluating bias is an important part of developing a model. Deploying a model that’s biased can lead to unfair outcomes for individuals and repercussions for organizations. DataRobot offers robust tools to test if your models are behaving in a biased manner and diagnose the root cause of biased behavior. However, this is only part of the story. Just because your model was bias-free at the time of training doesn’t mean biased behavior won’t emerge over time. To that end, we’ve extended our Bias and Fairness capabilities to include bias monitoring in our MLOps platform. In this post, we’ll walk you through an example of how to use DataRobot to monitor a deployed model for biased behavior.

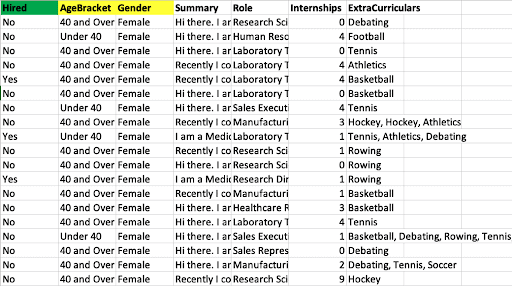

The Data

We’ll be using a dataset that contains job applications and training a model to predict if the candidates were hired or not. The green column is our target, and the yellow columns are Protected Features, or features we want to examine for model bias.

Checking Models for Bias

After the models have been built, we can begin evaluating them using the Bias and Fairness insight.

The Per-Class Bias tool tells us how each class within a Protected Feature performs on a number of different Fairness Metrics. The example above is using the Proportional Parity metric, which looks at the percentage of records in each class that receives the favorable outcome. In this case, the favorable outcome is the target class “Yes,” meaning someone was hired. The full setup of the Bias and Fairness tool can be viewed in this video.
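To make the metric concrete, here is a minimal sketch of how a proportional-parity-style score could be computed by hand. It is not DataRobot's implementation; the function name `favorable_rates`, the `predicted` key, and the toy records are all assumptions made for illustration.

```python
from collections import defaultdict


def favorable_rates(records, feature, prediction_key="predicted", favorable="Yes"):
    """Return, for each class of `feature`, the share of records
    predicted as the favorable outcome."""
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for row in records:
        cls = row[feature]
        totals[cls] += 1
        if row[prediction_key] == favorable:
            favorable_counts[cls] += 1
    return {cls: favorable_counts[cls] / totals[cls] for cls in totals}


# Hypothetical toy data: four applicants per class, two predicted "Yes" in each.
applications = [
    {"Gender": "Male", "predicted": "Yes"},
    {"Gender": "Male", "predicted": "No"},
    {"Gender": "Male", "predicted": "Yes"},
    {"Gender": "Male", "predicted": "No"},
    {"Gender": "Female", "predicted": "Yes"},
    {"Gender": "Female", "predicted": "No"},
    {"Gender": "Female", "predicted": "No"},
    {"Gender": "Female", "predicted": "Yes"},
]

rates = favorable_rates(applications, "Gender")
print(rates)  # {'Male': 0.5, 'Female': 0.5}
```

Equal rates across classes, as in this toy data, are what a balanced Proportional Parity chart would reflect.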

The feature “Gender” is selected, and the chart displays the computed Proportional Parity scores for men and women. Both have approximately the same score, meaning this model isn’t biased in terms of gender. However, the feature “AgeBracket” has a notification next to it indicating that biased behavior has been detected. If we were to select this feature, we could similarly dive into it to understand the extent of the bias.
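One simple way to turn per-class scores into a "bias detected" flag is to scale each class against the most favored class and compare the result with a threshold. The sketch below assumes a threshold of 0.8 and made-up scores and class labels; the post does not state which threshold or rule DataRobot applies, so treat every number and name here as hypothetical.

```python
def relative_scores(rates):
    """Scale per-class favorable rates so the most favored class scores 1.0."""
    best = max(rates.values())
    if best == 0:
        # No class receives the favorable outcome, so no class is favored.
        return {cls: 1.0 for cls in rates}
    return {cls: rate / best for cls, rate in rates.items()}


def flagged_classes(rates, threshold=0.8):
    """Return classes whose relative score falls below the assumed threshold."""
    scores = relative_scores(rates)
    return sorted(cls for cls, score in scores.items() if score < threshold)


# Hypothetical favorable-outcome rates per class.
gender_rates = {"Male": 0.50, "Female": 0.48}
age_rates = {"Under 40": 0.60, "40 and over": 0.30}

print(flagged_classes(gender_rates))  # no class falls below the threshold
print(flagged_classes(age_rates))     # the older bracket is flagged
```

With these illustrative numbers, the gender scores are close enough to pass, while the older age bracket receives the favorable outcome at half the rate of the younger one and is flagged.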

Now that we’ve examined our model, we’re ready to deploy it.

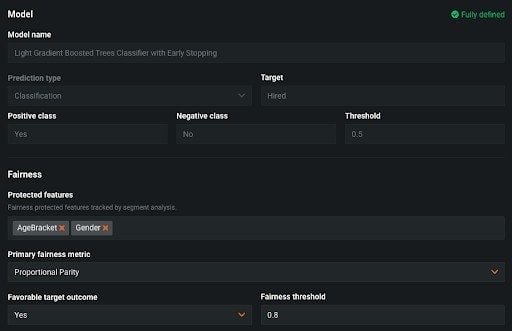

Deploying Models with Bias Monitoring

We start by selecting the Protected Features we want to monitor for bias. In many countries and industries, these features are protected by law and often include gender, age, and race. In the above example, we’ve selected “AgeBracket,” which denotes whether a particular applicant is above or below 40 years old, and “Gender.”

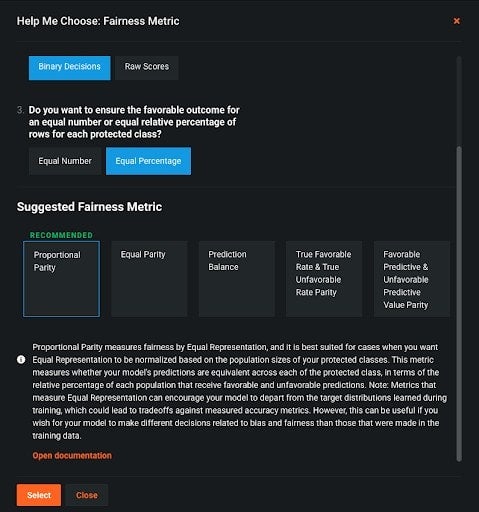

Next we need to choose the Fairness Metric. There are many different ways to measure bias in deployed models, and deciding which one to use depends on your use case. In some cases you might be interested in the proportion of each class that received a certain outcome, and in other cases you might be interested in the model’s error rate across those classes. DataRobot offers five industry-standard Fairness Metrics to measure bias and provides in-depth descriptions of each. If you’re unsure which one to use, a guided workflow can point you toward an appropriate metric. As when we trained the model, we’ve again selected Proportional Parity to monitor the deployed model.
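The difference between a proportion-based and an error-based view is easiest to see on a small example. The sketch below computes a per-class false negative rate, used here purely as an illustration of an error-based measure and not claimed to be one of DataRobot's five metrics. The record keys `predicted` and `actual` and the data are assumptions.

```python
from collections import defaultdict


def false_negative_rates(records, feature, favorable="Yes"):
    """Among records whose actual outcome was favorable, return the share
    per class that the model predicted as unfavorable."""
    actual_favorable = defaultdict(int)
    missed = defaultdict(int)
    for row in records:
        if row["actual"] != favorable:
            continue
        cls = row[feature]
        actual_favorable[cls] += 1
        if row["predicted"] != favorable:
            missed[cls] += 1
    return {cls: missed[cls] / actual_favorable[cls] for cls in actual_favorable}


# Hypothetical data: both classes are predicted "Yes" for 2 of 4 applicants,
# so a proportion-based view sees them as equal.
scored = [
    {"Gender": "Male", "predicted": "Yes", "actual": "Yes"},
    {"Gender": "Male", "predicted": "Yes", "actual": "Yes"},
    {"Gender": "Male", "predicted": "No", "actual": "No"},
    {"Gender": "Male", "predicted": "No", "actual": "No"},
    {"Gender": "Female", "predicted": "Yes", "actual": "Yes"},
    {"Gender": "Female", "predicted": "No", "actual": "Yes"},
    {"Gender": "Female", "predicted": "Yes", "actual": "No"},
    {"Gender": "Female", "predicted": "No", "actual": "No"},
]

fnr = false_negative_rates(scored, "Gender")
print(fnr)
```

In this toy data the share of favorable predictions is identical for both classes, yet the model misses half of the women who were actually hired and none of the men. That is why the choice of metric depends on which kind of unfairness matters for the use case.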

To finish things, we’ll choose which class of the target leads to a favorable outcome. As when we trained the model, it’s again “Yes,” meaning someone was hired. Now we’re ready to deploy the model.

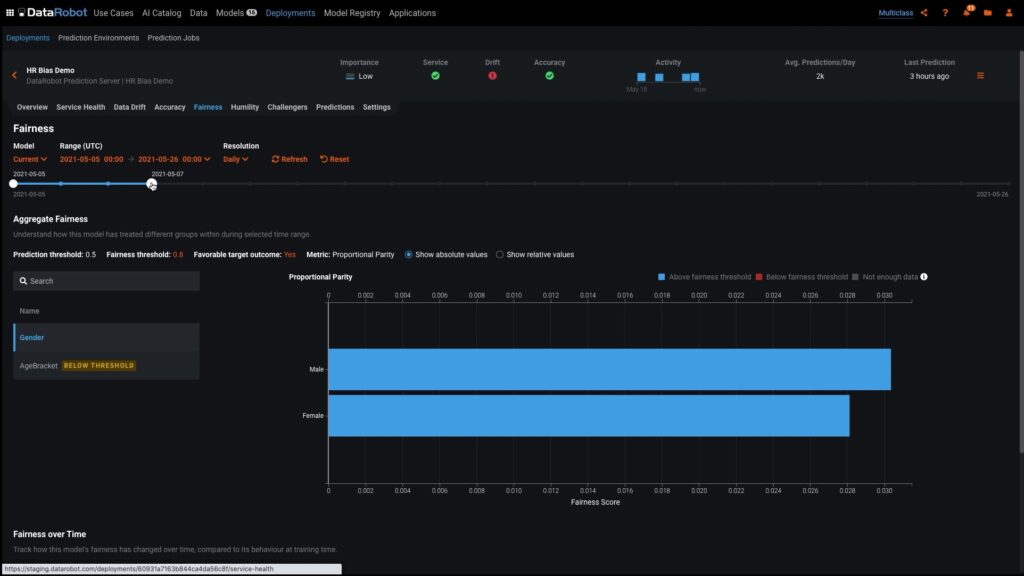

Monitoring Deployed Models for Bias

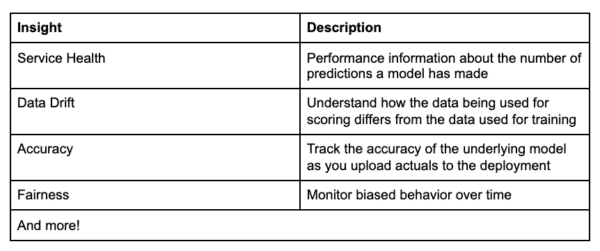

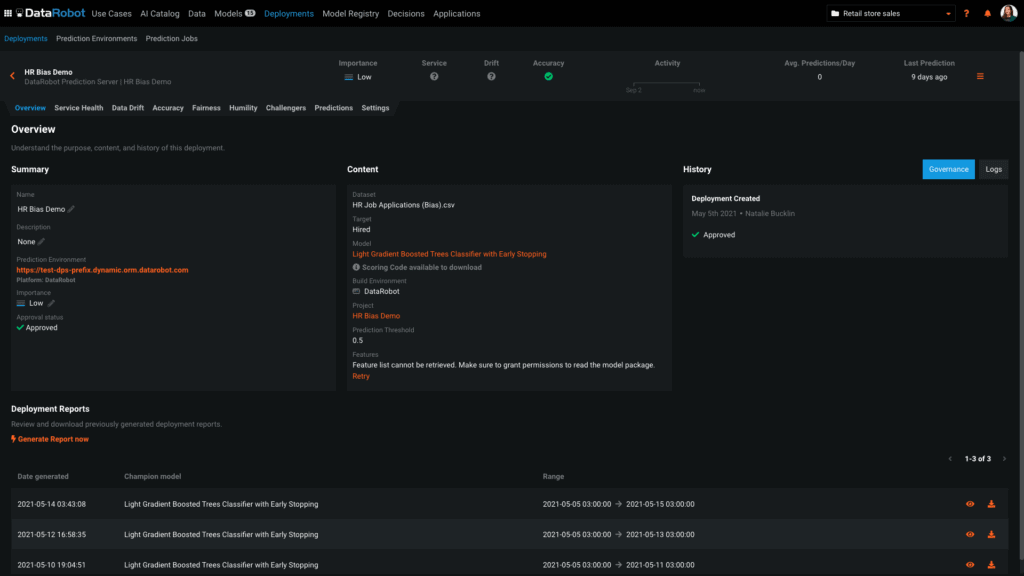

The screenshot below shows the Overview page of our newly deployed model (referred to as a deployment) in DataRobot’s MLOps platform. From here we can access the deployment’s insights, such as the Fairness, Accuracy, and Data Drift insights discussed below.

As the Activity bar shows, this deployment has been making predictions for some time. We’ve also been uploading the actuals to the deployment, which are the actual results of a record after a prediction has been made. This is required for the Fairness insight, which allows us to monitor bias over time, and also enables other insights, such as Accuracy.

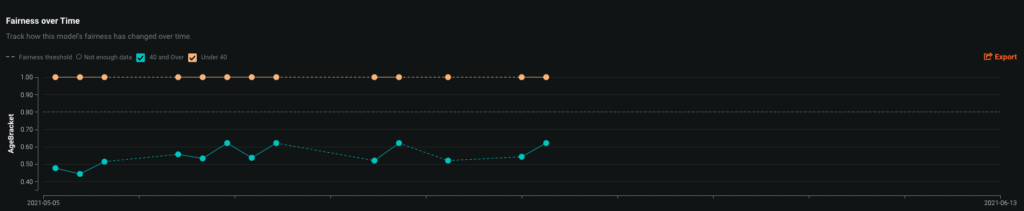

The tools in the Fairness insight function similarly to the Bias and Fairness insight we used when developing the model. Using the Aggregate Fairness tool, we’re able to see how each of the classes within a Protected Feature compares on different Fairness Metrics. Because we’ve been using this deployment to score data and upload the actuals, we’re able to get a historical view of how the Fairness Metrics have changed over time. This is reflected in the ability to drag a slider and select our desired time period. In the screenshot below, we’ve selected the Protected Feature “Gender.” We can see that when the deployment was first created, it wasn’t behaving in a biased manner, as evidenced by men and women having roughly the same score. But as we drag the slider further to the right, the scores become unbalanced, and the bar representing women is shaded red. This means the deployment has become biased against women and is treating them unfairly.

The Fairness Over Time tool gives us a single historical view of the Fairness Metrics for a Protected Feature over an extended period of time. As the Aggregate Fairness tool showed us, the scores for men and women were initially quite similar. But over time they began to diverge, leading the deployment to become biased.
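A historical view like this can be approximated by grouping scored records into time periods and computing the per-class favorable rate within each period. The following is a rough sketch under stated assumptions: the `period` and `predicted` keys, the helper `make_rows`, the period labels, and all counts are hypothetical and not taken from the deployment shown in the post.

```python
from collections import defaultdict


def rates_over_time(records, feature, favorable="Yes"):
    """Return {period: {class: favorable rate}} for the given feature."""
    counts = defaultdict(lambda: [0, 0])  # (period, class) -> [favorable, total]
    for row in records:
        key = (row["period"], row[feature])
        counts[key][1] += 1
        if row["predicted"] == favorable:
            counts[key][0] += 1
    series = defaultdict(dict)
    for (period, cls), (fav, total) in sorted(counts.items()):
        series[period][cls] = fav / total
    return dict(series)


def make_rows(period, gender, n_yes, n_no):
    """Build hypothetical scored records for one period and class."""
    yes = [{"period": period, "Gender": gender, "predicted": "Yes"}] * n_yes
    no = [{"period": period, "Gender": gender, "predicted": "No"}] * n_no
    return yes + no


# Hypothetical scoring history: balanced at first, then diverging.
scored = (
    make_rows("2021-01", "Male", 5, 5)
    + make_rows("2021-01", "Female", 5, 5)
    + make_rows("2021-02", "Male", 6, 4)
    + make_rows("2021-02", "Female", 3, 7)
)

history = rates_over_time(scored, "Gender")
for period, rates in history.items():
    gap = max(rates.values()) - min(rates.values())
    print(period, rates, f"gap={gap:.2f}")
```

A widening gap between classes from one period to the next is the kind of divergence the chart makes visible.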

Understanding Why Bias Emerged

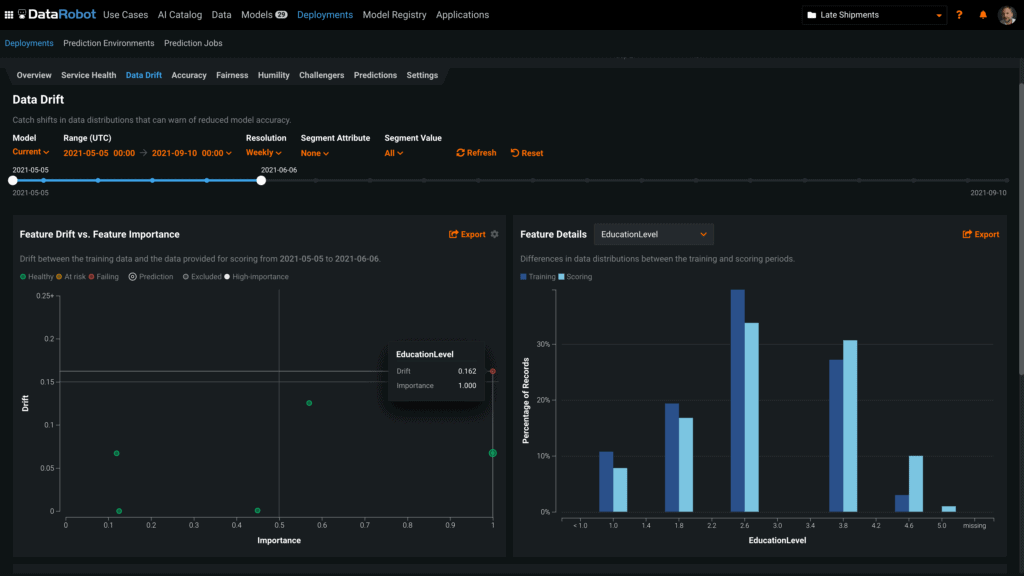

Now that we’ve seen the deployment has become biased, the next step is to understand why. Using the Data Drift insight of MLOps, we can see how the data being used for scoring differs from the data used for training. Data Drift in the deployment’s features could explain the emergence of bias.

The Feature Drift versus Feature Importance tool plots the extent to which each feature has drifted against its importance. Features that have drifted a lot and are very important (those in the top right quadrant) could contribute to biased behavior. The feature “EducationLevel” meets these criteria and has been selected. Looking at the Feature Details tool, we can see that lower levels of education were more prevalent in the training data, while higher levels of education appear more frequently in the scoring data. This difference could be one factor in the emergence of bias over time.
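To quantify this kind of shift for a categorical feature, one commonly used measure is the Population Stability Index (PSI). The sketch below is only an illustration of the idea and makes no claim about how the Data Drift insight computes its scores; the category names and counts for “EducationLevel” are invented.

```python
import math
from collections import Counter


def population_stability_index(training_values, scoring_values, epsilon=1e-6):
    """PSI between two samples of a categorical feature.
    `epsilon` keeps the logarithm defined when a category is absent
    from one of the samples."""
    train_counts = Counter(training_values)
    score_counts = Counter(scoring_values)
    psi = 0.0
    for category in set(train_counts) | set(score_counts):
        p = max(train_counts[category] / len(training_values), epsilon)
        q = max(score_counts[category] / len(scoring_values), epsilon)
        psi += (q - p) * math.log(q / p)
    return psi


# Hypothetical distributions: lower education levels dominate training,
# higher levels dominate scoring.
training = ["High School"] * 50 + ["Bachelor's"] * 30 + ["Master's"] * 20
scoring = ["High School"] * 20 + ["Bachelor's"] * 30 + ["Master's"] * 50

psi = population_stability_index(training, scoring)
print(f"PSI for EducationLevel: {psi:.3f}")
```

A PSI of zero means the two distributions match. As a rough rule of thumb, values above about 0.25 are often read as a substantial shift, though the appropriate cutoff depends on the use case.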

Ensure Fair and Unbiased AI Models in Production

Monitoring biased behavior is something that needs to be done throughout the lifecycle of a model. Although a model may be bias-free when initially deployed, biased behavior can emerge over time as the model makes predictions. DataRobot’s Bias Monitoring capabilities ensure that you can discover if a model becomes biased and diagnose the cause of biased behavior.

Bias Monitoring is new functionality in the MLOps platform as of the 7.2 Release. Ensure trust in your production models with bias monitoring. Check out the demo recording or request a live demo.

About the author

Data Scientist and Product Manager

Natalie Bucklin is the Product Manager of Trusted and Explainable AI. She is passionate about ensuring trust and transparency in AI systems. In addition to her role at DataRobot, Natalie serves on the Board of Directors for a local nonprofit in her home of Washington, DC. Prior to joining DataRobot, she was a manager for IBM’s Advanced Analytics practice. Natalie holds an MS from Carnegie Mellon University.

