
Introduction

If you look at the literature on Artificial Intelligence published since the start of the 21st century, you can’t ignore Deep Learning. It is a subset of Machine Learning that learns a hierarchy of features to extract meaningful insights from a complex input space.

While people are often excited to use deep learning, they can quickly get discouraged by the difficulty of implementing their own deep networks. In industry especially, integrating deep learning into an organization’s existing computational infrastructure remains a challenge. Many organizations are realizing that the deep learning code in a real-world system is far smaller than the infrastructure needed to support it.

As projects move from small-scale research to large-scale deployment, your organization will need substantial resources to support inference, distributed training, data pipelines, and model monitoring. Small companies, however, struggle to compete against large companies that have huge resources to invest in developing deep learning workflows. Luckily, there are some universal best practices for rolling out deep learning models successfully at a company of any size and means.

Define The Business Problem

The first and most important step in running a successful deep learning project is to define a business problem. Without this, a project simply cannot exist. To home in on a business use case, start with a list of critical business issues to choose from, possibly by soliciting feedback and ideas from teams across the company.

Let’s use invoice digitization as an example. You should be able to answer these questions:

- What is the current process? The process of reviewing invoices has evolved a lot over time, from manual reviewing to invoice scanning. Digitizing invoices involves a lot of human moderated steps, so we can definitely use technology to make this process better.

- Would the use of deep learning techniques specifically help with this business issue, and if so, how? Deep learning has advanced considerably on the specific problem of reading text and extracting structured and unstructured information from images. By merging existing deep learning methods with optical character recognition (OCR) technology, companies and individuals have been able to automate document digitization, gaining easier manual data entry, better logging and storage, fewer errors, and better response times.

- Do we have the appropriate amount of data? When choosing a deep learning use case, you’ll want to pick one for which data is already available. A requirement to collect data before beginning a project is not ideal since it will significantly prolong the process. In our use case, there are datasets available for OCR for tasks like number plate recognition or handwriting recognition but these datasets are hardly enough to get the kind of accuracy an insurance claims processing or a vendor repayment assignment would require.

- Are we willing to work on this problem with an external partner? Because you’ll be working on the project with someone else, the problem selected should be one where you’re actually willing to involve a third party. Note that if you choose to build an in-house solution instead, the cost of building it must be offset by more customers signing up, a higher rate of invoice processing, and a decrease in the number of manual reviewers required.

- Will this project help me make or save money? If the answer is “maybe” or “no,” then scratch it off the list. The project should focus on opportunities with real and measurable results. For invoice digitization, here are a couple of benefits: automating processes, increasing efficiency, reducing costs and storage, increasing customer satisfaction, and reducing ecological footprint.

Calculate the Return-On-Investment

There are many challenges in calculating the ROI of a deep learning project. One is that it’s often difficult to isolate the project’s own contribution to improvements, especially for larger business outcomes. Furthermore, the calculation is often complicated because the value isn’t captured in one number and can spread across multiple departments and teams. Thus, the biggest part of the work here is often determining all the possible ways that a deep learning project could bring success.

The list of factors is different for each business, but the universal contributions are:

- Saving Time: This is very easy to quantify. The time saved through deep learning projects can be re-invested into other initiatives in your organization.

- Saving Money: This value is entirely dependent on the individual project. Deep learning can improve accuracy and efficiency across industries. For a predictive maintenance use case, deep learning can reduce losses from customer churn and the human effort spent on maintenance. For a recommendation engine use case, deep learning can increase customer engagement and brand loyalty. For a customer service chatbot use case, deep learning can decrease support operating costs and improve customer experience.

- Allowing For Scalability: Internal IT efforts to package data and maintain models for the deep learning engineers can be costly if the data is hard to access and too big to handle. This is a key benefit of deep learning platforms, since your engineers can access the data pipeline and make changes when needed. This eventually allows your organization to scale faster without worrying about the infrastructure burden.

- Improving Customer Experience: When the project demonstrates your organization’s commitment to its customers, they are more likely to choose you over competitors, giving you an edge. Additionally, customers are less likely to churn when their experience is tailored to their individual needs. Deep learning can deliver this sort of customer experience without radically increasing human costs.

To increase the ROI of your project, set clear KPIs that can be tracked over time, for example: (1) the number of projects delivered per month, (2) the time from start to prototype to production, and (3) the ratio of projects deployed to production over project requests from clients.
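As a rough illustration of how such numbers might be tracked, here is a minimal Python sketch. The `ProjectKpis` fields, the function names, and the simple ROI formula (benefit minus cost, divided by cost) are assumptions made for this example, not a standard the article prescribes.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ProjectKpis:
    """Hypothetical monthly KPI snapshot for a deep learning team."""
    projects_delivered: int      # projects delivered this month
    projects_requested: int      # project requests received from clients
    projects_in_production: int  # projects actually deployed to production
    days_to_production: list     # days from start to production, one per project


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def deployment_ratio(kpis: ProjectKpis) -> float:
    """Ratio of projects deployed to production over project requests."""
    if kpis.projects_requested == 0:
        return 0.0
    return kpis.projects_in_production / kpis.projects_requested


def mean_days_to_production(kpis: ProjectKpis) -> float:
    """Average time from project start to production, in days."""
    return mean(kpis.days_to_production)
```

Recomputing these values every month gives a trend line rather than a single number, which is usually what stakeholders ask for when the benefits are spread across several teams.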

Focus on Data Quality and Quantity

It’s very important to invest in pre-processing your data before feeding it into the deep learning model. Real-world data is generally incomplete (lacking attribute values, lacking certain attributes of interest, or containing only aggregate data), noisy (containing errors or outliers), and inconsistent (containing discrepancies in codes or names). Good pre-processing practices include cleaning (filling in missing values, smoothing noisy data, identifying or removing outliers, and resolving inconsistencies), integration (combining multiple databases, data cubes, or files), transformation (normalization and aggregation), reduction (reducing the volume while producing the same or similar analytical results), and discretization (replacing numerical attributes with nominal ones).
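To make the cleaning and transformation steps concrete, here is a small sketch in plain Python. The function names are illustrative, and the choices made here (median imputation, clipping to fixed bounds, min-max scaling) are just one reasonable option for each step, not the only one.

```python
from statistics import median


def fill_missing(values):
    """Cleaning: replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill_value = median(observed)
    return [fill_value if v is None else v for v in values]


def clip_outliers(values, lower, upper):
    """Cleaning: clamp every value into the closed range [lower, upper]."""
    return [min(max(v, lower), upper) for v in values]


def min_max_normalize(values):
    """Transformation: rescale values linearly into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

In a real project the same steps would normally be applied column by column with a dataframe library, and the imputation and scaling statistics would be computed on the training split only.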

On the quantity side, when deploying your deep learning model in a real-world application, you should constantly feed more data to the model to continue improving its performance. But it’s not easy to collect well-annotated data, since the process can be time-consuming and expensive. Hiring people to manually collect raw data and label them is not efficient at all.

One way to save both time and money is to train your deep learning model on large-scale open-source datasets and then fine-tune it on your own data. For example, the Open Images Dataset from Google has close to 16 million bounding boxes across 600 object categories, drawn on roughly 1.9 million images. A model trained on this data is likely to be good enough at detecting objects already, so you only have to classify the objects in the boxes.

The approach above works if the use case is similar to the kind of tasks that the big open-source projects cater to. Many real-world applications, however, require almost 100% accuracy (for example, fraud detection or self-driving vehicles), which is impossible to achieve with open-source datasets alone. To deal with this, you can get more data through data augmentation: making minor alterations to existing data to generate synthetically modified data. Augmentation helps prevent your neural network from learning irrelevant patterns and boosts its overall performance. Popular augmentation techniques for image data include flipping images horizontally and vertically, rotating them by some angle, scaling them outward or inward by some ratio, cropping the original images, translating them along some direction, and adding Gaussian noise. This NanoNets article provides a detailed review of several of these approaches, as well as more advanced ones such as generative models.
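A few of these augmentations can be sketched in plain Python on a grayscale image stored as a list of rows. This is only an illustration with made-up function names; a real pipeline would typically rely on an image library and operate on arrays.

```python
import random


def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Reverse the order of the rows."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Keep a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clamp pixels to the 0..255 range."""
    return [
        [min(255.0, max(0.0, pixel + rng.gauss(0.0, sigma))) for pixel in row]
        for row in image
    ]


def augment(image, seed=0):
    """Return several synthetic variants of one input image."""
    rng = random.Random(seed)  # seeded so the variants are reproducible
    return [
        flip_horizontal(image),
        flip_vertical(image),
        rotate_90_clockwise(image),
        add_gaussian_noise(image, sigma=5.0, rng=rng),
    ]
```

Note that geometric transforms also have to be applied to any bounding boxes or masks attached to the image, otherwise the labels no longer match the pixels.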

Tackle Data Annotation

Beyond data augmentation, an often overlooked component of a strong data stack is the process by which data is annotated, which needs to be robust. Annotation is the process of adding contextual information or labels to images, which serve as training examples for algorithms to learn how to identify various features automatically. Depending on your dataset, annotations can be generated internally or outsourced to third-party service providers.

You can try out third-party platforms that offer image annotation services. All you have to do is describe the kind of data and annotations you need. Some of the well-known platforms are Alegion, Figure Eight, and Scale. Their solutions help industries such as autonomous vehicles, drones, robotics, AR/VR, and retail with various data labeling and annotation tasks.

The paragraph above covers third-party image annotation services, so here I’ll look at best practices for in-house annotation, that is, building your own annotation infrastructure:

- It is important to invest in formal and standardized training and education to help drive quality and consistency across different datasets and annotators. Skipping this step can lead to ambiguity and, as a result, a lot of data that needs to be re-annotated later on, especially for data that requires a specific type of expertise or that involves subjective judgment.

- It is also important to build out a quality control process, which ensures that annotation outputs are validated by other people to drive better quality data. This step comes after the initial annotation pass: a random sample of the annotated data is sent through for quality control.

- Lastly, it is crucial to incorporate analytics to manage the annotation process in a data-driven manner, particularly given its operational nature. This data can be used to manage the process, measure how your team is doing against targets, and see which annotators are over- or under-performing.
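The quality control and analytics points above can be sketched as follows. This is a minimal illustration, assuming each review is recorded as a tuple of annotator name, original label, and reviewer label; the function names and the data layout are hypothetical.

```python
import random
from collections import defaultdict


def sample_for_review(annotation_ids, review_fraction, seed=0):
    """Randomly pick a fraction of annotations to send to a second reviewer."""
    if not 0.0 <= review_fraction <= 1.0:
        raise ValueError("review_fraction must be between 0 and 1")
    ids = list(annotation_ids)
    rng = random.Random(seed)  # seeded so the sample can be reproduced
    count = round(len(ids) * review_fraction)
    return sorted(rng.sample(ids, count))


def accuracy_per_annotator(reviews):
    """Share of reviewed annotations where the reviewer agreed with the annotator.

    `reviews` is an iterable of (annotator, original_label, reviewer_label).
    """
    totals = defaultdict(int)
    agreed = defaultdict(int)
    for annotator, original_label, reviewer_label in reviews:
        totals[annotator] += 1
        if original_label == reviewer_label:
            agreed[annotator] += 1
    return {name: agreed[name] / totals[name] for name in totals}


def flag_underperformers(accuracy, target):
    """Names of annotators whose agreement rate falls below the target."""
    return sorted(name for name, score in accuracy.items() if score < target)
```

Agreement with a reviewer is only a proxy for correctness, so flagged annotators are better treated as candidates for retraining or clearer guidelines than as a final verdict.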

Now that you have built an annotation infrastructure, how do you enable annotators to output data more efficiently?

- In the early stages of annotating data for your models, it often makes sense to use more traditional approaches (classical computer vision techniques) as a base for your annotations. These approaches can significantly reduce annotation time and improve overall performance.

- The annotation user interface is very important. A well thought-out, simple interface goes a long way in driving consistent and high-quality annotations from your users.

- Finally, generating synthetic data can also help maximize the output of a single annotation, since each synthetic variant generated from an annotated example carries a corresponding annotation.

Overall, the capacity to efficiently generate clean, annotated datasets at scale is absolutely a core value-driver for your business.

Assemble The Team

Today’s deep learning teams consist of people with different skill sets. Many different roles are needed when you start building production-level deep learning solutions. Implementing machine learning requires very complex coordination across multiple teams and can only be done by the best Product Managers. Here are the various individuals/teams required to get ML into the hands of users:

- Data Scientist who explores and tries to understand correlations in the gathered data. This person understands how statistics and deep learning work and has decent programming chops.

- Deep Learning Engineer who transforms deep learning models created by the data scientist for production. This person excels in writing maintainable code and has a good understanding of how deep learning works.

- Data Engineer who takes care of collecting the data and making it available for the rest of the team. This person knows how to plan maintainable data pipelines and manages how the data is tested.

- Data Annotator who labels and annotates training data.

- Designer who designs the user experience and interaction.

- Quality Assurance Engineer who tests the algorithms and checks for failure cases. This person should be good at best practices in test-driven development.

- Operations Manager who integrates deep learning into the business process. This person understands which internal, complex processes of the organization would gain value from cheap prediction and can therefore benefit from deep learning.

- Distribution Manager (usually from the Sales/Marketing department) who communicates the value of deep learning to clients. This person must provide domain-specific knowledge.

Write Production-Ready Code

Since a lot of data scientists who are in charge of building deep learning models don’t come from a software engineering background, the quality of their code can vary a lot, causing issues with reproducibility and maintainability later down the line. Therefore, a best practice here is to write production-ready code: code that gets read and executed by many other people, not just the person who wrote it. In particular, production-ready code must be (1) reproducible, (2) modular, and (3) well-documented. These are challenges the software engineering world has already tackled, so let’s dive into some approaches that can have an immediate positive impact on the quality of your deep learning project.

To make your code reproducible, you should definitely version control your codebase with “git” and push commits frequently. It is important to version the datasets your models are trained on as well, so you can track how model performance varies with the training data. You should also use a virtual environment such as “virtualenv” or “conda”, which takes a config file listing the packages used in the code. You can distribute this file across your team via version control to ensure that your colleagues are working in the same environment. Lastly, you should use a proper IDE like PyCharm or Visual Studio Code when programming, instead of Jupyter Notebooks. Notebooks are great for initial prototyping, but not good for a reproducible workflow.

To make your code modular, you need a pipeline framework that handles data engineering and modeling workflows. Some good choices are Luigi and Apache Airflow, which let you build the workflow as a series of nodes in a graph and give you dependency management and workflow execution. You should also write unit tests for your codebase to ensure everything behaves as expected, which is especially important in deep learning because you are dealing with black-box algorithms. Finally, you should consider adding continuous integration to your repo, which can run your unit tests or pipeline after every commit or merge, making sure that no change breaks the codebase.
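To show what “a series of nodes in a graph” means in practice, here is a toy sketch using only the Python standard library (`graphlib`, available from Python 3.9). It deliberately does not use the Luigi or Airflow APIs; the task names and the `run_pipeline` helper are made up for illustration.

```python
import unittest
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run tasks in dependency order.

    `tasks` maps a task name to a function that receives the results so far.
    `dependencies` maps a task name to the set of tasks that must run first.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        results[name] = tasks[name](results)
    return order, results


# A three-node example workflow: load -> clean -> train.
EXAMPLE_TASKS = {
    "load": lambda results: [3, 1, 2],
    "clean": lambda results: sorted(results["load"]),
    "train": lambda results: sum(results["clean"]) / len(results["clean"]),
}
EXAMPLE_DEPENDENCIES = {"load": set(), "clean": {"load"}, "train": {"clean"}}


class PipelineTest(unittest.TestCase):
    """The kind of unit test a CI job could run after every commit."""

    def test_tasks_run_in_dependency_order(self):
        order, results = run_pipeline(EXAMPLE_TASKS, EXAMPLE_DEPENDENCIES)
        self.assertEqual(order, ["load", "clean", "train"])
        self.assertEqual(results["train"], 2.0)
```

A dedicated framework adds what this sketch leaves out, such as retries, scheduling, caching of finished tasks, and a UI, but the underlying idea of declaring tasks and their upstream dependencies is the same.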

To keep your code well-documented, organize it in a standard structure that makes it easy for your colleagues to understand your codebase. The template from Cookiecutter is my favorite. Then choose a coding style convention (such as PEP8) and enforce it with a pre-commit hook that runs a linter such as flake8 or a formatter such as yapf. To avoid writing all the documentation by hand, use Sphinx to generate docs for your project from the docstrings in your code.

Track Model Experiments

Most companies today are able to systematically store only the version of the code, usually via a version control system like Git. For each deep learning experiment, your organization should have a systematic approach for storing the following:

- Training Code: You can simply use a regular software development version control such as Git to store the exact version of code used for running a particular experiment.

- Model Parameters: Storing parameters and being able to see their values for a particular model over time will help you gain insights and intuition on how the parameter space affects your model. This is critical when growing your team, since new employees will be able to see how a particular model has been trained in the past.

- Datasets: Versioning and storing the datasets is very important for reproducibility and compliance purposes. As explainability becomes more and more difficult when dealing with deep learning, a good way to start understanding the models is to look at the training data. Good dataset versioning will help you when dealing with privacy laws like GDPR or possible future legal queries about issues such as model bias.

- Hardware: If you use more powerful cloud hardware, it is important to get easy visibility to hardware usage statistics. It should be easy to see the GPU usage and memory usage of machines without having to manually run checks and monitoring. This will optimize the usage of machines and help debug bottlenecks.

- Environment: Package management is difficult but completely mandatory to make code easy to run. Container-based tools like Docker allow you to quickly speed up collaboration around projects.

- Cost: The cost of experiments is important in assessing and budgeting machine learning development.

- The Model: Model versioning gives you a reliable way to store the outputs of your code.

- Logs: Training execution time logs are essential for debugging use cases but they can also provide important information on keeping track of your key metrics like accuracy or training speed and estimated time to completion.

- Results: It should be trivial to see the performance and key results of any training experiment that you have run.

By systematic, I mean an approach where you are actually able to take a model in production and look at the version of code used to come up with that model. This should be done for every single experiment, regardless of whether it is in production or not.
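One lightweight way to picture such a record is a small structure that ties the items above together. The sketch below is an assumption-laden illustration: the field names, the use of a SHA-256 hash as the dataset version, and the JSON serialization are choices made for this example, not the format of any particular tracking tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def dataset_fingerprint(data: bytes) -> str:
    """Content hash that changes whenever the dataset bytes change."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ExperimentRecord:
    """Hypothetical record linking one trained model to what produced it."""
    code_version: str   # e.g. the Git commit hash of the training code
    parameters: dict    # hyperparameters used for this run
    dataset_hash: str   # output of dataset_fingerprint()
    environment: str    # e.g. a container image tag
    metrics: dict = field(default_factory=dict)  # accuracy, loss, ...
    cost_usd: float = 0.0                        # compute cost of the run

    def to_json(self) -> str:
        """Serialize with sorted keys so records diff cleanly."""
        return json.dumps(asdict(self), sort_keys=True)


def load_record(text: str) -> ExperimentRecord:
    """Rebuild a record from its JSON form."""
    return ExperimentRecord(**json.loads(text))
```

Storing one such record next to every model artifact, including the runs that never reach production, is what makes it possible to answer later which code and data a given model came from.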

On-Premise vs. Cloud Infrastructure

Cloud infrastructure includes traditional providers such as AWS, GCP, and Microsoft Azure, as well as ML-specific providers such as Paperspace and FloydHub. On-premise infrastructure includes pre-built deep learning servers such as Nvidia workstations and Lambda Labs, as well as built-from-scratch deep learning workstations (check out resources such as this and this).

- The key benefits of choosing the cloud option include a low financial barrier to entry, available support from the providers, and the ability to scale up or down across computing clusters of different sizes.

- On the other hand, investing in on-premise systems lets you iterate and try different experiments for as much time and as many projects as the hardware can handle, without considering the cost.

Ultimately, choosing between these two options really depends on the use case at hand and the kind of data involved. Most likely, your organization will need to move from one environment to another at different points in the project workflow from initial experiment to large-scale deployment.

When you’re just getting started, a cloud provider, especially an ML-specific one, makes sense. With little upfront investment, both in dollars and time, you can get your first model training on a GPU. However, as your team grows and your number of models increases, the cost savings from going on-prem can reach almost 10X over the big public clouds. Add the increased flexibility in what you can build and better data security, and on-premise solutions become the right choice for doing deep learning at scale.

Enable Distributed Training

One of the major reasons for the sudden boost in deep learning’s popularity has to do with massive computational power. Deep learning requires training neural networks with a massive number of parameters on a huge amount of data. Distributed computing, therefore, is a perfect tool to take advantage of modern hardware to its fullest. If you’re not familiar with distributed computing, it’s a way to program software that uses several distinct components connected over a network.

Distributed training of neural networks can be approached in 2 ways: (1) data parallelism and (2) model parallelism.

- Data parallelism attempts to divide the dataset equally across the nodes of the system, where each node has a copy of the neural network along with its local weights. Each node operates on a unique subset of the dataset and updates its local set of weights. These local weights are shared across the cluster to compute a new global set of weights through an accumulation algorithm. These global weights are then distributed to all the nodes, and processing continues with the next batch of data.

- Model parallelism attempts to distribute training by splitting the architecture of the model across separate nodes. It is applicable when the model architecture is too big to fit on a single machine and the model has some parts that can be parallelized. Generally, most networks can fit on 2 GPUs, which limits the amount of scalability that can be achieved this way.
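The data-parallel loop described above can be simulated on a single machine to make the steps explicit. The sketch below is a toy: it fits a one-parameter model y = w * x, uses plain averaging as the accumulation algorithm, and runs the “nodes” sequentially. All names are illustrative, and real frameworks usually average gradients with an all-reduce rather than averaging weights this way.

```python
def split_into_shards(samples, num_workers):
    """Give each worker a disjoint, roughly equal subset of the data."""
    shards = [samples[i::num_workers] for i in range(num_workers)]
    if any(len(shard) == 0 for shard in shards):
        raise ValueError("more workers than samples")
    return shards


def local_update(weight, shard, learning_rate):
    """One gradient step on a worker's shard (mean squared error loss)."""
    gradient = sum(2 * (weight * x - y) * x for x, y in shard) / len(shard)
    return weight - learning_rate * gradient


def data_parallel_step(global_weight, shards, learning_rate):
    """Each worker starts from the global weight, updates locally,
    then the local weights are averaged into a new global weight."""
    local_weights = [
        local_update(global_weight, shard, learning_rate) for shard in shards
    ]
    return sum(local_weights) / len(local_weights)


def train(samples, num_workers, learning_rate=0.01, steps=200):
    """Repeat the broadcast / local update / average cycle."""
    shards = split_into_shards(samples, num_workers)
    weight = 0.0
    for _ in range(steps):
        weight = data_parallel_step(weight, shards, learning_rate)
    return weight


# Toy dataset generated from y = 3 * x, so training should recover w close to 3.
EXAMPLE_SAMPLES = [(float(x), 3.0 * x) for x in range(1, 9)]
```

In a real cluster the list comprehension inside `data_parallel_step` is where the parallelism lives: each local update runs on a different machine, and the averaging step is the communication cost that limits how well training scales.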

Practically, data parallelism is more popular and frequently employed in large organizations for executing production-level deep learning algorithms. Below are a couple of good frameworks that allow you to do data parallelism at scale:

- MXNet – A lightweight, portable, and flexible distributed deep learning framework for Python, R, Julia, Go, Javascript and more.

- deeplearning4j – A distributed deep learning platform for Java, Clojure, Scala.

- Distributed Machine Learning Toolkit (DMTK) – A distributed machine learning framework (created by Microsoft) that supports topic modelling, word embedding, and gradient boosting trees.

- Elephas – An extension of Keras, which allows you to run distributed deep learning models at scale with Spark.

- Horovod – A distributed training framework (created by Uber) for TensorFlow, Keras, PyTorch, and MXNet.

Deploy Models In The Wild

Deep learning projects should not happen in a vacuum; it’s critical to actually integrate the model into the operations of the company. Going back to the first section, the goal of a deep learning project is, after all, to deliver business insights. This means the model needs to go into production rather than get stuck in a prototyping or sandbox phase.

One of the most underrated challenges in machine learning development is deploying trained models to production in a scalable way. One of the best tools for addressing this problem is Docker, a containerization platform that packages an application and all its dependencies into a container. Docker is a good fit when you have many isolated services that act as data providers to a web application. Depending on the load, instances can be spun up on demand according to the rules you have set up.

Kubernetes Engine is another great place to run your workloads at scale. Before being able to use Kubernetes, you need to containerize your applications. You can run most applications in a Docker container without too much hassle. However, effectively running those containers in production and streamlining the build process is another story. Below are solid practices, according to Google Cloud, to help you effectively build containers:

- Package a single application per container: It’s harder to debug when more than one application is running inside a container.

- Optimize for the Docker build cache: Docker can cache layers of your images to accelerate later builds. This is a very useful feature, but it introduces some behaviors that you need to take into account when writing your Dockerfiles. For example, you should add the source code of your application as late as possible in your Dockerfile so that the base image and your application’s dependencies get cached and aren’t rebuilt on every build.

- Remove unnecessary tools: This helps you reduce the attack surface of your host system.

- Build the smallest image possible: This decreases download times, cold start times, and disk usage. You can use several strategies to achieve that: start with a minimal base image, leverage common layers between images and make use of Docker’s multi-stage build feature.

- Consider whether to use a public image: Using public images can be a great way to start working with a particular piece of software. However, using them in production can come with a set of challenges, especially in a high-constraint environment. You might need to control what’s inside them, or you might not want to depend on an external repository, for example. On the other hand, building your own images for every piece of software you use is not trivial, particularly because you need to keep up with the security updates of the upstream software. Carefully weigh the pros and cons of each for your particular use-case, and make a conscious decision.

Broadly speaking, containers are a great way to make sure that your analyses and models are reproducible across different environments. While containers are useful for keeping dependencies clean on a single machine, the main benefit is that they enable data scientists to write model endpoints without worrying about how the container will be hosted. This separation of concerns makes it easier to partner with engineering teams to deploy models to production. Additionally, with Docker and/or Kubernetes, data scientists can also own the deployment of models to production.

There is, of course, a simpler and more intuitive way to perform OCR tasks.

Deep Learning with Nanonets

The Nanonets API allows you to build OCR models with ease.

You can upload your data, annotate it, train the model, and get predictions through a browser-based UI without writing a single line of code, worrying about GPUs, or finding the right architecture for your deep learning models. You can also retrieve the JSON response for each prediction to integrate it with your own systems and build machine-learning-powered apps on state-of-the-art algorithms and a strong infrastructure.

Using the GUI: https://app.nanonets.com/

You can also use the Nanonets OCR API by following the steps below:

Step 1: Clone the Repo, Install dependencies

git clone https://github.com/NanoNets/nanonets-ocr-sample-python.git

cd nanonets-ocr-sample-python

sudo pip install requests tqdm

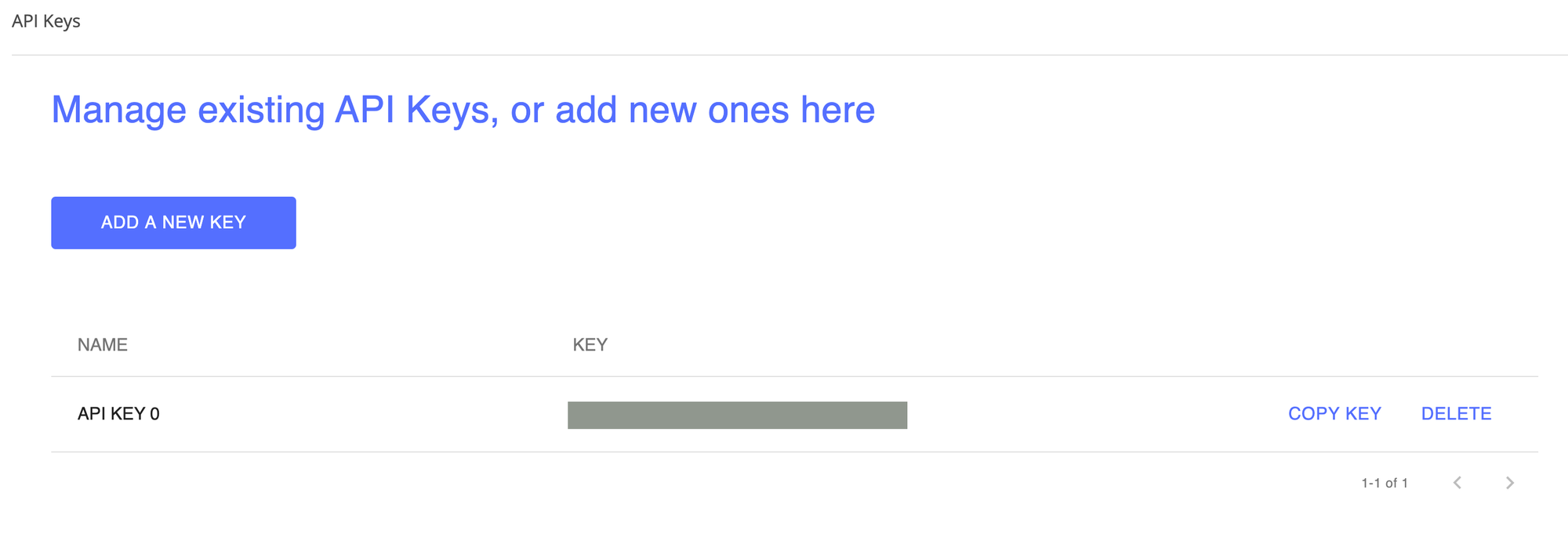

Step 2: Get your free API Key

Get your free API Key from http://app.nanonets.com/#/keys

Step 3: Set the API key as an Environment Variable

export NANONETS_API_KEY=YOUR_API_KEY_GOES_HERE

Step 4: Create a New Model

python ./code/create-model.py

Note: This generates a MODEL_ID that you need for the next step

Step 5: Add Model Id as Environment Variable

export NANONETS_MODEL_ID=YOUR_MODEL_ID

Note: you will get YOUR_MODEL_ID from the previous step
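Since the remaining steps depend on both environment variables being set, a small pre-flight check can save a failed run. The helper below is a hypothetical addition, not part of the sample repository; it only assumes the two variable names used in the steps above.

```python
import os

# Variable names taken from Step 3 and Step 5 above.
REQUIRED_VARIABLES = ("NANONETS_API_KEY", "NANONETS_MODEL_ID")


def missing_variables(environ=None, required=REQUIRED_VARIABLES):
    """Return the required variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in required if not env.get(name)]


def check_environment(environ=None):
    """Raise a readable error if any required variable is missing."""
    missing = missing_variables(environ)
    if missing:
        raise RuntimeError("Please export: " + ", ".join(missing))
```

Calling `check_environment()` at the top of your own wrapper script, before invoking the upload or training steps, turns a confusing authentication failure into an explicit message about which variable was forgotten.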

Step 6: Upload the Training Data

The training data is found in images (image files) and annotations (annotations for the image files)

python ./code/upload-training.py

Step 7: Train Model

Once the Images have been uploaded, begin training the Model

python ./code/train-model.py

Step 8: Get Model State

The model takes ~2 hours to train. You will get an email once the model is trained. In the meantime, you can check the state of the model

python ./code/model-state.py

Step 9: Make Prediction

Once the model is trained, you can make predictions using the model

python ./code/prediction.py ./images/151.jpg

Nanonets and Humans in the Loop

The ‘Moderate’ screen aids the correction and entry processes, reducing the manual reviewer’s workload by almost 90% and the organisation’s costs by 50%.

Features include

- Track predictions which are correct

- Track which ones are wrong

- Make corrections to the inaccurate ones

- Delete the ones that are wrong

- Fill in the missing predictions

- Filter images with date ranges

- Get counts of moderated images against the ones not moderated

All the fields are structured into an easy to use GUI which allows the user to take advantage of the OCR technology and assist in making it better as they go, without having to type any code or understand how the technology works.

Conclusion

By neglecting any of these 10 practices, you risk frustrating the stakeholders involved and leaving them with a negative view of the deep learning project in action. You also risk evaluating the results of an incomplete effort that was doomed to fail from the start, wasting a lot of time and money. Adhering to these practices will allow your organization to move a deep learning proof-of-concept into production quickly, saving both time and money.

