
The intelligence demonstrated by machines is known as Artificial Intelligence. Artificial Intelligence has become very popular in today’s world. It is the simulation of natural intelligence in machines that are programmed to learn and mimic the actions of humans. These machines are able to learn with experience and perform human-like tasks. As technologies such as AI continue to grow, they will have a great impact on our quality of life.

Table of Contents

- Introduction to Artificial Intelligence

- How Does Artificial Intelligence Work?

- What are the Types of Artificial Intelligence?

- Where is AI Used?

- What are the Prerequisites for Artificial Intelligence?

- Applications of Artificial Intelligence in Business

- Everyday Applications of Artificial Intelligence

- Artificial Intelligence Jobs

- Career Trends in AI

- Future of Artificial Intelligence

- Artificial Intelligence Movies

Introduction to Artificial Intelligence

The short answer to “What is Artificial Intelligence?” is that it depends on whom you ask.

A layman with only a fleeting understanding of technology would link it to robots. They’d say Artificial Intelligence is a Terminator-like figure that can act and think on its own.

Ask an AI researcher, and they would say that it’s a set of algorithms that can produce results without having to be explicitly instructed to do so. And both would be right, in their own way. So, to summarise, Artificial Intelligence is:

Artificial Intelligence Definition

- An intelligent entity created by humans.

- Capable of performing tasks intelligently without being explicitly instructed.

- Capable of thinking and acting rationally and humanly.

How do we measure if Artificial Intelligence is acting like a human?

Even if we reach the point where an AI can behave as a human does, how can we be sure it will continue to behave that way? We can assess the human-likeness of an AI entity using:

- Turing Test

- The Cognitive Modelling Approach

- The Laws of Thought Approach

- The Rational Agent Approach

Let’s take a detailed look at how these approaches perform:

What is the Turing Test in Artificial Intelligence?

The basis of the Turing Test is that the Artificial Intelligence entity should be able to hold a conversation with a human agent, and the human agent should ideally not be able to tell that they are talking to an Artificial Intelligence. To achieve this, the AI needs to possess these qualities:

- Natural Language Processing to communicate successfully.

- Knowledge Representation to act as its memory.

- Automated Reasoning to use the stored information to answer questions and draw new conclusions.

- Machine Learning to detect patterns and adapt to new circumstances.

Cognitive Modelling Approach

As the name suggests, this approach tries to build an Artificial Intelligence model based on human cognition. To distil the essence of the human mind, there are 3 approaches:

- Introspection: observing our thoughts, and building a model based on that

- Psychological Experiments: conducting experiments on humans and observing their behaviour

- Brain Imaging: Using MRI to observe how the brain functions in different scenarios and replicating that through code.

The Laws of Thought Approach

The Laws of Thought are a set of logical statements that are meant to govern the operation of our mind. The same laws can be codified and applied to artificial intelligence algorithms. The issue with this approach is that solving a problem in principle (strictly according to the laws of thought) and solving it in practice can be quite different, because practice requires contextual nuance. Also, we take some actions without being 100% certain of the outcome, and an algorithm might not be able to replicate those decisions if there are too many parameters.

The Rational Agent Approach

A rational agent acts to achieve the best possible outcome in its present circumstances.

According to the Laws of Thought approach, an entity must behave according to logical statements. But in some situations there is no logically right thing to do; instead there are multiple possible actions, each with different outcomes and corresponding compromises. The rational agent approach tries to make the best possible choice in the current circumstances, which makes it a much more dynamic and adaptable agent.
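One common way to formalise “the best possible choice” is to pick the action with the highest expected utility, weighting each possible outcome by its probability. The sketch below is a minimal illustration under that assumption; the route names, probabilities and utility values are hypothetical.

```python
# A minimal sketch of a rational agent choosing the action with the
# highest expected utility. The actions, probabilities and utility
# values are hypothetical, chosen only for illustration.

def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)


def choose_action(actions):
    """Return the action whose outcomes have the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical scenario: a delivery robot choosing a route.
actions = {
    "short_route": [(0.7, 10), (0.3, -20)],  # fast, but risk of a blocked road
    "long_route": [(1.0, 4)],                 # slower, but always succeeds
}

for name, outcomes in actions.items():
    print(name, expected_utility(outcomes))
print("chosen:", choose_action(actions))
```

Here the risky short route has a lower expected utility than the safe long route, so a rational agent prefers the long route even though its best case is worse.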

Now that we understand how Artificial Intelligence can be designed to act like a human, let’s take a look at how these systems are built.

How Does Artificial Intelligence (AI) Work?

Building an AI system is a careful process of reverse-engineering human traits and capabilities in a machine, and using its computational prowess to surpass what we are capable of.

To understand how Artificial Intelligence actually works, one needs to dive deep into the various subdomains of Artificial Intelligence and understand how those domains can be applied across industries.

- Machine Learning: ML teaches a machine how to make inferences and decisions based on past experience. It identifies patterns and analyses past data to infer the meaning of these data points and reach a possible conclusion without having to involve human experience. Automating conclusions in this way saves businesses time and helps them make better decisions.

- Deep Learning: Deep Learning is an ML technique. It teaches a machine to process inputs through multiple layers in order to classify, infer and predict the outcome.

- Neural Networks: Neural Networks are inspired by the way neurons in the human brain work. They are a series of algorithms that capture the relationships between various underlying variables and process data in a way loosely modelled on the brain.

- Natural Language Processing: NLP is the science of a machine reading, understanding and interpreting a language. Once a machine understands what the user intends to communicate, it responds accordingly.

- Computer Vision: Computer vision algorithms try to understand an image by breaking it down and studying different parts of the objects in it. This helps the machine classify and learn from a set of images, and make better output decisions based on previous observations.

- Cognitive Computing: Cognitive computing algorithms try to mimic a human brain by analysing text, speech, images and objects in the way a human does, and try to give the desired output.
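To make the Neural Networks entry above more concrete, here is a minimal sketch of a single artificial neuron (a perceptron) that learns from labelled examples. It is an illustrative assumption, far simpler than the deep networks used in practice: the neuron learns the logical AND function from its four-row truth table by nudging its weights after every mistake.

```python
# A minimal perceptron: a single artificial neuron that learns a
# weighted sum of its inputs from labelled examples. Illustrative
# sketch only, not how production neural networks are built.

def predict(weights, bias, x):
    """Fire (return 1) if the weighted sum of inputs exceeds zero."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Classic perceptron learning rule: nudge weights toward each mistake."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Learn the logical AND function from its truth table.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

A single perceptron can only learn patterns that a straight line can separate, such as AND; stacking many such units in layers is what lets deep learning handle harder problems.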

Artificial Intelligence draws on a diverse set of disciplines and functions as an amalgamation of:

- Philosophy

- Mathematics

- Economics

- Neuroscience

- Psychology

- Computer Engineering

- Control Theory and Cybernetics

- Linguistics

Let’s take a detailed look at each of these components.

Philosophy

The purpose of philosophy for humans is to help us understand our actions, their consequences, and how we can make better decisions. Modern intelligent systems can be built by following the different approaches of philosophy that will enable these systems to make the right decisions, mirroring the way that an ideal human being would think and behave. Philosophy would help these machines reason about the nature of knowledge itself. It would also help them make the connection between knowledge and action through goal-based analysis to achieve desirable outcomes.


Mathematics

Mathematics is the language of the universe, and a system built to solve universal problems would need to be proficient in it. For machines to understand the world, logic, computation, and probability are necessary.

The earliest algorithms were simply mathematical pathways to make calculations easier, soon followed by theorems, hypotheses and more, all of which followed a pre-defined logic to arrive at a computational output. The third mathematical pillar, probability, helps predict future outcomes, and Artificial Intelligence algorithms base much of their decision-making on these predictions.

Economics

Economics is the study of how people make choices according to their preferred outcomes. It’s not just about money, although money is the medium through which people’s preferences are manifested in the real world. There are many important concepts in economics, such as decision theory, operations research and Markov decision processes. They have all contributed to our understanding of ‘rational agents’ and laws of thought, by using mathematics to show how these decisions are made at large scales and what their collective outcomes are. These decision-theoretic techniques help build intelligent systems.

Neuroscience

Since neuroscience studies how the brain functions and Artificial Intelligence is trying to replicate it, there’s an obvious overlap here. The biggest difference between human brains and machines is that computers are millions of times faster than the human brain, but the human brain still has the advantage in terms of storage capacity and interconnections. This gap is slowly being closed with advances in computer hardware and more sophisticated software, but there’s still a big challenge to overcome, as we still don’t know how to use computer resources to achieve the brain’s level of intelligence.

Psychology

Psychology can be viewed as the middle point between neuroscience and philosophy. It tries to understand how our specially-configured and developed brain reacts to stimuli and responds to its environment, both of which are important to building an intelligent system. Cognitive psychology views the brain as an information-processing device that operates based on beliefs and goals, similar to how we would build an intelligent machine of our own.

Many cognitive theories have already been codified to build algorithms that power the chatbots of today.

Computer Engineering

This is the most obvious component, but we’ve put it towards the end to help you understand what all this computer engineering is going to be based on. Computer engineering translates all our theories and concepts into a machine-readable language so that the machine can make its computations and produce an output that we can understand. Each advance in computer engineering has opened up more possibilities to build even more powerful Artificial Intelligence systems, based on advanced operating systems, programming languages, information management systems, tools, and state-of-the-art hardware.

Control Theory and Cybernetics

To be truly intelligent, a system needs to be able to control and modify its actions to produce the desired output. The desired output is defined as an objective function, which the system tries to move towards by continually modifying its actions based on changes in its environment, using mathematical computations and logic to measure and optimise its behaviour.
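The feedback idea above can be sketched with the simplest controller from control theory, a proportional controller. In this minimal illustration (an assumption, not a model of any specific system), the quantity being driven to zero is the error between a target and the current value, and the controller’s action is assumed to change the value directly, ignoring real-world dynamics such as heat loss. The set point and gain are hypothetical.

```python
# A minimal feedback-control loop (a proportional controller). At each
# step the controller measures the error between the target and the
# current value, and adjusts its action in proportion to that error.
# The set point and gain below are hypothetical.

def proportional_control(target, value, gain=0.5, steps=20):
    """Repeatedly correct `value` toward `target`; return the trajectory."""
    history = [value]
    for _ in range(steps):
        error = target - value   # how far we are from the objective
        value += gain * error    # act in proportion to the error
        history.append(value)
    return history


# Hypothetical room heating from 15 degrees C toward a 21 degrees C set point.
trajectory = proportional_control(target=21.0, value=15.0)
print([round(t, 2) for t in trajectory[:5]])
```

With a gain of 0.5 the remaining error halves at every step, so the value approaches the target quickly without overshooting it.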

Linguistics

Much of our thought is expressed through language, which is the most understandable representation of thought. Linguistics has led to the development of natural language processing, which helps machines understand human language and produce output in a manner that is understandable to almost anyone. Understanding a language is more than just learning how sentences are structured; it also requires knowledge of the subject matter and context, which is one reason the field of knowledge representation emerged.


What are the Types of Artificial Intelligence?

Not all types of AI use all the above fields simultaneously. Different Artificial Intelligence entities are built for different purposes, and that’s how they vary. AI can be classified in two ways: Type 1 (based on capabilities) and Type 2 (based on functionalities). Here’s a brief introduction to the first type.

Type 1 (Based on Capabilities)

- Artificial Narrow Intelligence (ANI)

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

Let’s take a detailed look.

What is Artificial Narrow Intelligence (ANI)?

This is the most common form of AI that you’d find in the market now. These Artificial Intelligence systems are designed to solve one single problem and are able to execute a single task really well. By definition, they have narrow capabilities, like recommending a product for an e-commerce user or predicting the weather. This is the only kind of Artificial Intelligence that exists today. They’re able to come close to human functioning in very specific contexts, and even surpass humans in many instances, but they only excel in very controlled environments with a limited set of parameters.

What is Artificial General Intelligence (AGI)?

AGI is still a theoretical concept. It’s defined as AI with human-level cognitive function across a wide variety of domains, such as language processing, image processing, computational functioning, reasoning and so on.

We’re still a long way from building an AGI system. An AGI system might need to comprise thousands of Artificial Narrow Intelligence systems working in tandem, communicating with each other to mimic human reasoning. Even with the most advanced computing systems and infrastructures, the challenge is enormous: in a 2013 experiment, Fujitsu’s K supercomputer took about 40 minutes to simulate one second of activity in a neural network equivalent to roughly 1% of the human brain. This speaks to both the immense complexity and interconnectedness of the human brain, and to the magnitude of the challenge of building an AGI with our current resources.

What is Artificial Super Intelligence (ASI)?

We’re almost entering science-fiction territory here, but ASI is seen as the logical progression from AGI. An Artificial Super Intelligence (ASI) system would be able to surpass all human capabilities. This would include making rational decisions, and even things like making better art and building emotional relationships.

Once we achieve Artificial General Intelligence, AI systems would rapidly be able to improve their capabilities and advance into realms that we might not even have dreamed of. While the gap between AGI and ASI could be relatively narrow (some say as little as a nanosecond, because that’s how fast Artificial Intelligence would learn), the long journey ahead of us towards AGI itself makes this seem like a concept that lies far in the future.

Strong and Weak Artificial Intelligence

Extensive research in Artificial Intelligence also divides it into two more categories, namely Strong Artificial Intelligence and Weak Artificial Intelligence. The terms were coined by John Searle in order to differentiate the performance levels in different kinds of AI machines. Here are some of the core differences between them.

| Weak AI | Strong AI |
| --- | --- |
| It is a narrow application with a limited scope. | It would be a wider application with a much broader scope. |
| It is good at specific tasks. | It would have human-level intelligence. |
| It learns from data for the task it was built for, for example through supervised and unsupervised learning. | It would understand and reason across domains, as a human does. |
| Example: Siri, Alexa. | Example: none yet; Strong AI remains hypothetical. |

Type 2 (Based on Functionalities)

Reactive Machines

One of the most basic forms of AI, a reactive machine has no memory and doesn’t use past information to inform future actions. It is one of the oldest forms of AI but has limited capability: it cannot learn, and can only respond automatically to a limited set of inputs. This type of AI cannot be relied upon to improve its operations based on memory. A popular example of a reactive AI machine is IBM’s Deep Blue, the chess-playing machine that beat world champion Garry Kasparov in 1997.

Limited Memory

AI systems that can use experience to influence future decisions are known as limited memory AI. Nearly all AI applications today fall into this category. These systems are trained on large volumes of data, which are stored in their memory (directly, or in the form of a learned model) as a reference for future problems. Take image recognition as an example. The AI is trained on thousands of pictures and their labels. When a new image is scanned, it uses the training images as a reference and understands the content of the image based on its “learning experience”. Its accuracy generally improves as it is trained on more data.
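The image-recognition example above can be illustrated with one of the simplest limited memory techniques, k-nearest neighbours, which literally keeps its labelled training examples and compares each new input against them. This is a minimal sketch under stated assumptions: each “image” is reduced to a hypothetical two-number feature vector, whereas real image recognition typically relies on features learned by much larger models. It needs Python 3.8+ for `math.dist`.

```python
# A minimal "limited memory" classifier: k-nearest neighbours keeps its
# labelled training examples in memory and labels a new input by looking
# up the most similar stored examples. Each "image" is reduced to a
# hypothetical 2-number feature vector purely for illustration.
import math
from collections import Counter


def knn_classify(training, query, k=3):
    """training: list of (features, label); returns the majority label
    among the k stored examples closest to `query`."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features: (ear pointiness, snout length)
training = [
    ((0.9, 0.2), "cat"), ((0.8, 0.3), "cat"), ((0.85, 0.25), "cat"),
    ((0.3, 0.8), "dog"), ((0.2, 0.9), "dog"), ((0.35, 0.7), "dog"),
]
print(knn_classify(training, (0.8, 0.2)))
print(knn_classify(training, (0.25, 0.85)))
```

Adding more labelled examples to `training` is this model’s only form of “experience”, which is why it fits the limited memory category rather than the reactive one.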

Theory of mind

This type of AI is still a concept and a work in progress, and would require considerable improvement before it is complete. It is currently being researched and could be used to better understand people’s emotions, needs, beliefs, and thoughts. Artificial emotional intelligence is a budding industry and an area of interest, but reaching this level of understanding will require time and effort. To truly understand human needs, the AI machine would have to perceive humans as individuals whose minds are shaped by multiple factors.

Self-awareness

A type of AI that has its own consciousness, is super-intelligent, and is self-aware. This type of AI also doesn’t exist yet, but if achieved, it would be one of the greatest milestones in the field of Artificial Intelligence. It can be considered the final stage of development and exists only hypothetically. Self-aware AI would be so evolved that it would be akin to the human brain. Creating AI advanced to this level could be extremely dangerous, because it could have ideas and thoughts of its own and could easily outmanoeuvre the intellect of human beings.

Reasoning in AI

Reasoning is the process of deriving logical conclusions and predictions from the knowledge, facts, and beliefs that are available. It is a general process of thinking rationally to draw insights and conclusions from the data at hand. It is essential in artificial intelligence so that machines can learn and think rationally, like the human brain. Developing reasoning within AI leads to human-like performance from the machine.

The different types of reasoning in AI are:

- Common Sense Reasoning

- Deductive Reasoning

- Inductive Reasoning

- Abductive Reasoning

- Non-monotonic Reasoning

- Monotonic Reasoning
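Deductive reasoning, and monotonic reasoning in particular, can be sketched with forward chaining: repeatedly apply if-then rules to the facts already known until nothing new follows. Because facts are only ever added, never retracted, no conclusion ever has to be withdrawn, which is what “monotonic” means. The facts and rules below are hypothetical, and real rule engines add indexing and conflict resolution on top of this basic loop.

```python
# A minimal forward-chaining sketch of deductive (and monotonic)
# reasoning: apply if-then rules to known facts until nothing new can
# be derived. The facts and rules are hypothetical examples.

def forward_chain(facts, rules):
    """facts: set of strings; rules: list of (premises, conclusion)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)  # new facts are only ever added
                changed = True
    return known


rules = [
    ({"socrates is a man"}, "socrates is mortal"),
    ({"socrates is mortal"}, "socrates will not live forever"),
]
derived = forward_chain({"socrates is a man"}, rules)
print(sorted(derived))
```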

What is the Purpose of Artificial Intelligence?

The purpose of Artificial Intelligence is to aid human capabilities and help us make advanced decisions with far-reaching consequences. That’s the answer from a technical standpoint. From a philosophical perspective, Artificial Intelligence has the potential to help humans live more meaningful lives devoid of hard labour, and help manage the complex web of interconnected individuals, companies, states and nations to function in a manner that’s beneficial to all of humanity.

Currently, the purpose of Artificial Intelligence is shared by all the different tools and techniques that we’ve invented over the past thousand years – to simplify human effort, and to help us make better decisions. Artificial Intelligence has also been touted as our Final Invention, a creation that would invent ground-breaking tools and services that would exponentially change how we lead our lives, by hopefully removing strife, inequality and human suffering.

That’s all in the far future, though; we’re still a long way from those kinds of outcomes. Currently, Artificial Intelligence is being used mostly by companies to improve their process efficiencies, automate resource-heavy tasks, and make business predictions based on hard data rather than gut feelings. As with all technology that came before it, the research and development costs need to be subsidised by corporations and government agencies before it becomes accessible to everyday laymen.

Where is Artificial Intelligence (AI) Used?

AI is used in different domains to give insights into user behaviour and give recommendations based on data. For example, Google’s predictive search algorithm uses past user data to predict what a user will type next in the search bar. Netflix uses past user data to recommend what movie a user might want to see next, keeping users hooked on the platform and increasing watch time. Facebook uses users’ past data to automatically suggest which friends to tag, based on their facial features in images. AI is used everywhere by large organisations to make end users’ lives simpler. The uses of Artificial Intelligence broadly fall under the data processing category, which includes the following:

- Searching within data, and optimising the search to give the most relevant results

- Logic-chains for if-then reasoning, that can be applied to execute a string of commands based on parameters

- Pattern detection to identify significant patterns in large data sets for unique insights

- Applied probabilistic models for predicting future outcomes
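The predictive-search example and the last item above can be illustrated with a toy probabilistic model. This minimal sketch is an assumption for illustration only and is not how Google’s system works: it counts which word followed which in a hypothetical query log (a bigram model) and suggests the most frequent follower, which is the word with the highest estimated probability of coming next.

```python
# A minimal next-word predictor: count which word follows which in past
# queries (a bigram model) and suggest the most frequent follower. The
# query log is hypothetical; real search-suggestion systems are far
# more sophisticated than this.
from collections import Counter, defaultdict


def build_bigrams(queries):
    """Map each word to a Counter of the words observed right after it."""
    followers = defaultdict(Counter)
    for query in queries:
        words = query.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def suggest_next(followers, word):
    """Return the most likely next word, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


queries = [
    "weather today",
    "weather tomorrow",
    "weather today in london",
    "news today",
]
model = build_bigrams(queries)
print(suggest_next(model, "weather"))
```

Dividing each count by the total number of followers of a word would turn these counts into explicit probabilities; picking the largest count gives the same suggestion.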

What are the Advantages of Artificial Intelligence?

There’s no doubt that technology has made our lives better. From music recommendations, map directions and mobile banking to fraud prevention, AI and other technologies have taken over. But there’s a fine line between advancement and destruction, and there are always two sides to a coin; that is the case with AI as well. Let us take a look at some advantages of Artificial Intelligence:

Advantages of Artificial Intelligence (AI)

- Reduction in human error

- Available 24×7

- Helps in repetitive work

- Digital assistance

- Faster decisions

- Rational Decision Maker

- Medical applications

- Improves Security

- Efficient Communication

Let’s take a closer look.

Reduction in human error

In an Artificial Intelligence model, decisions are based on previously gathered information and a defined set of algorithms. As a result, errors can be reduced and accuracy improved, provided the data and algorithms are sound. When humans perform a task, there’s always a chance of error. We aren’t powered by algorithms and programs, and so AI can be used to avoid such human errors.

Available 24×7

While an average human works 6-8 hours a day, AI can make machines work 24×7 without breaks or boredom. Humans cannot work for long periods, as our bodies require rest. An AI-powered system doesn’t require breaks and is best used for tasks that need 24×7 concentration.

Helps in repetitive work

AI can productively automate mundane tasks and free people up to be increasingly creative, from sending a thank-you mail or verifying documents to decluttering or answering queries. A repetitive task such as making food in a restaurant or working in a factory can be messed up when humans are tired or have been uninterested for a long time. Such tasks can easily be performed efficiently with the help of AI.

Digital assistance

Many advanced organizations use digital assistants to interact with users in order to save human resources. These digital assistants are also used on many websites to answer user queries and provide a smooth interface. Chatbots are a great example of this.

Faster decisions

AI, alongside other technologies, can make machines take decisions faster than an average human to carry out actions quicker. This is because while making a decision, humans tend to analyze many factors both emotionally and practically as opposed to AI-powered machines that deliver programmed results quickly.

Rational Decision Maker

We as humans may have evolved to a great extent technologically, but when it comes to decision making, we still allow our emotions to take over. In certain situations, it becomes important to take quick, efficient and logical decisions without letting our emotions control the way we think. AI powered decision making will be controlled with the help of algorithms and thus, there is no scope for emotional decision making. This ensures that efficiency will not be affected and increases productivity.

Medical applications

One of the biggest advantages of Artificial Intelligence is its use in the medical industry. Doctors are now able to assess their patients’ health risks with the help of AI-powered medical applications. Radiosurgery is being used to operate on tumors in a way that doesn’t damage the surrounding tissue. Medical professionals have been trained to use AI for surgery. AI can also help efficiently detect and monitor various neurological disorders and stimulate brain functions.

Improves Security

With advancement in technology, there are chances of it being used for the wrong reasons, such as fraud and identity theft. But if used in the right manner, AI can be very helpful in keeping our security intact. It is being developed to help protect our lives and property. One major area where we can already see AI being applied to security is cybersecurity, where it has significantly changed the way we protect ourselves against cyber-threats.


Efficient Communication

Just a few years ago, people who didn’t speak the same language couldn’t communicate with each other without a human translator who understood and spoke both languages. AI has greatly reduced this problem. Natural Language Processing, or NLP, allows systems to translate words from one language to another, eliminating the middleman. Google Translate has advanced to a great extent and even provides audio of how a word or sentence in another language should be pronounced.

What are the Disadvantages of Artificial Intelligence?

Disadvantages of Artificial Intelligence (AI)

- Cost overruns

- Dearth of talent

- Lack of practical products

- Lack of standards in software development

- Potential for misuse

- Highly dependent on machines

- Requires Supervision

Let’s take a closer look at them.

Cost overruns

What separates AI from normal software development is the scale at which it operates. Because of this scale, the computing resources required can increase exponentially, pushing up the cost of the operation, which brings us to the next point.

Dearth of talent

Since it’s still a fairly nascent field, there’s a lack of experienced professionals, and the best ones are quickly snapped up by corporations and research institutes. This increases the talent cost, which further drives up Artificial Intelligence implementation prices.

Lack of practical products

For all the hype surrounding AI, it doesn’t seem to have a lot to show for it. Granted, applications such as chatbots and recommendation engines do exist, but the applications don’t seem to extend much beyond that. This makes it difficult to make a case for pouring more money into improving AI capabilities.

Lack of standards in software development

The true value of Artificial Intelligence lies in collaboration, when different AI systems come together to form a bigger, more valuable application. But a lack of standards in AI software development means that it’s difficult for different systems to ‘talk’ to each other. This makes Artificial Intelligence software development slow and expensive, which further acts as an impediment to AI development.

Potential for Misuse

The power of Artificial Intelligence is massive, and it has the potential to achieve great things. Unfortunately, it also has the potential to be misused. Artificial Intelligence by itself is a neutral tool that can be used for anything, but if it falls into the wrong hands, it would have serious repercussions. In this nascent stage where the ramifications of AI developments are still not completely understood, the potential for misuse might be even higher.

Highly dependent on machines

Most people are already highly dependent on applications such as Siri and Alexa. By receiving constant assistance from machines and applications, we are losing our ability to think creatively. By becoming completely dependent on machines, we are losing out on learning simple life skills, becoming lazier, and raising a generation of highly-dependent individuals.

Requires Supervision

Algorithms can function very well: they are efficient and will perform the task as programmed. However, the drawback is that we still have to supervise them continually. Although the task is performed by machines, we need to ensure that mistakes are not being made. One example of why supervision is required is Microsoft’s AI chatbot ‘Tay’. The chatbot was modelled to speak like a teenage girl by learning through online conversations. It went from learning basic conversational skills to tweeting offensive and inflammatory content, because internet trolls deliberately fed it such material.

Prerequisites for Artificial Intelligence

As a beginner, here are some of the basic prerequisites that will help get started with the subject.

- A strong grasp of mathematics, namely calculus, statistics and probability.

- A good amount of experience in programming languages such as Python or Java.

- A strong grasp of understanding and writing algorithms.

- A strong background in data analytics.

- A good amount of knowledge of discrete mathematics.

- The will to learn machine learning tools and techniques.

Applications of Artificial Intelligence in Business

AI truly has the potential to transform many industries, with a wide range of possible use cases. What all these industries and use cases have in common is that they are data-driven. Since Artificial Intelligence is, at its core, an efficient data processing system, there’s a lot of potential for optimisation everywhere.

Let’s take a look at the industries where AI is currently shining.

Healthcare:

- Administration: AI systems are helping with routine, day-to-day administrative tasks to minimise human errors and maximise efficiency. NLP is used to transcribe medical notes and to structure patient information so that doctors can read it more easily.

- Telemedicine: For non-emergency situations, patients can reach out to a hospital’s AI system to analyse their symptoms, input their vital signs and assess if there’s a need for medical attention. This reduces the workload of medical professionals by bringing only crucial cases to them.

- Assisted Diagnosis: Through computer vision and convolutional neural networks, AI is now capable of reading MRI scans to check for tumours and other malignant growths much faster than radiologists can, helping them prioritise and review cases.

- Robot-assisted surgery: Surgical robots work with a very small margin of error and don’t get exhausted the way humans do. Because they operate with such a high degree of precision, they enable less invasive procedures than traditional methods, which can reduce the time patients spend in hospital recovering.

- Vital Stats Monitoring: A person’s state of health is an ongoing process, depending on the varying levels of their vital stats. With wearable devices achieving mass-market popularity, this data is now available on tap, just waiting to be analysed to deliver actionable insights. Since vital signs have the potential to predict health fluctuations even before the patient is aware of them, there are a lot of life-saving applications here.
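The vital-stats idea above can be sketched as simple anomaly detection: learn a person’s baseline from past readings, then flag new readings that sit unusually far from it. This minimal example measures “unusually far” with a z-score (distance from the mean in standard deviations). The heart-rate values and the threshold of 3 are hypothetical choices for illustration, not clinical guidance, and real monitoring systems use far richer models.

```python
# A minimal sketch of vital-sign monitoring: flag readings that deviate
# strongly from a person's own baseline using a z-score. The heart-rate
# values and the 3-standard-deviation threshold are hypothetical,
# chosen for illustration only (not clinical guidance).
from statistics import mean, stdev


def flag_anomalies(baseline, new_readings, threshold=3.0):
    """Return readings whose z-score against the baseline exceeds threshold."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs >= 2 readings
    return [r for r in new_readings if abs(r - mu) / sigma > threshold]


baseline_bpm = [62, 65, 63, 61, 64, 66, 63, 62, 64, 65]
todays_bpm = [63, 64, 98, 62]
print(flag_anomalies(baseline_bpm, todays_bpm))
```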

E-commerce

- Better recommendations: This is usually the first example that people give when asked about business applications of AI, and that’s because it’s an area where AI has delivered great results already. Most large e-commerce players have incorporated Artificial Intelligence to make product recommendations that users might be interested in, which has led to considerable increases in their bottom-lines.

- Chatbots: Another famous example, based on the proliferation of Artificial Intelligence chatbots across industries and on seemingly every other website we visit. These chatbots now serve customers during off-hours and peak hours alike, removing the bottleneck of limited human resources.

- Filtering spam and fake reviews: Due to the high volume of reviews that sites like Amazon receive, it would be impossible for human eyes to scan through them to filter out malicious content. Through the power of NLP, Artificial Intelligence can scan these reviews for suspicious activities and filter them out, making for a better buyer experience.

- Optimising search: All of the e-commerce depends upon users searching for what they want, and being able to find it. Artificial Intelligence has been optimising search results based on thousands of parameters to ensure that users find the exact product that they are looking for.

- Supply-chain: AI is being used to predict demand for different products in different timeframes so that they can manage their stocks to meet the demand.
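Review and spam filtering is often introduced with a naive Bayes text classifier. The Python sketch below is a minimal version with add-one (Laplace) smoothing, trained on four made-up reviews. The class names and training texts are assumptions. A system at Amazon’s scale would rely on much richer features and far more data.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and keep runs of letters, digits and apostrophes as words.
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayesFilter:
    """Multinomial naive Bayes text classifier with add-one smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.word_counts = {c: Counter() for c in self.classes}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        best_class, best_score = None, -math.inf
        for c in self.classes:
            # Log prior plus the sum of smoothed log likelihoods of each word.
            score = math.log(self.doc_counts[c] / total_docs)
            total_words = sum(self.word_counts[c].values())
            for word in tokenize(text):
                count = self.word_counts[c][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_class, best_score = c, score
        return best_class

reviews = [
    "great product works as described",
    "sturdy build and fast delivery",
    "buy now best deal click this link",
    "click here free gift card best deal",
]
labels = ["genuine", "genuine", "spam", "spam"]

model = NaiveBayesFilter().fit(reviews, labels)
print(model.predict("free gift click now"))         # → spam
print(model.predict("works great, fast delivery"))  # → genuine
```

Log probabilities are summed instead of multiplying raw probabilities. This avoids numerical underflow on long reviews.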

Human Resources

- Building work culture: AI is being used to analyse employee data in order to place employees in the right teams, assign projects based on their competencies, collect feedback about the workplace, and even try to predict whether they’re on the verge of quitting.

- Hiring: With NLP, AI can go through thousands of CVs in a matter of seconds and assess whether a candidate is a good fit. This can reduce human error and considerably shorten hiring cycles. However, the models must be checked carefully, because they can inherit biases from the data they are trained on.
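As a deliberately simplified sketch of CV screening, the Python code below turns a job description and each CV into word-count vectors. It then ranks the CVs by cosine similarity to the job description. The candidate names and texts are hypothetical. Production systems use far richer NLP models, and any such ranking still needs auditing for bias.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words vector: word -> count ('+' and '#' kept for C++/C#)."""
    return Counter(re.findall(r"[a-z+#]+", text.lower()))

def cosine_similarity(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_cvs(job_description, cvs):
    """Return (candidate, score) pairs sorted from best to worst match."""
    job_vec = vectorize(job_description)
    scores = [(name, cosine_similarity(job_vec, vectorize(text)))
              for name, text in cvs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

job = "python developer with machine learning and sql experience"
cvs = {
    "candidate_a": "experienced python developer, machine learning projects, sql",
    "candidate_b": "graphic designer skilled in illustration and branding",
}
for name, score in rank_cvs(job, cvs):
    print(f"{name}: {score:.2f}")
```

Cosine similarity compares the direction of the vectors rather than their length. As a result, a long CV is not favoured simply for containing more words.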

Robots in AI

The field of robotics was advancing even before AI became a reality. Today, artificial intelligence is helping robotics innovate faster and build more efficient robots. AI-powered robots have found applications across verticals and industries, especially in manufacturing and packaging. Here are a few applications of robots in AI:

Assembly

- AI along with advanced vision systems can help in real-time course correction

- It also helps robots learn which path is best for a certain process while they are in operation

Customer Service

- AI-enabled robots are being used in a customer service capacity in retail and hospitality industries

- These robots leverage Natural Language Processing to interact with customers intelligently and in a human-like way

- The more these systems interact with humans, the more they learn, with the help of machine learning

Packaging

- AI enables quicker, cheaper, and more accurate packaging

- It records the motions a robot makes and constantly refines them, making robotic systems easier to install and move

Open Source Robotics

- Robotic systems today are being sold as open-source systems with AI capabilities

- In this way, users can teach robots to perform custom tasks based on a specific application, e.g. small-scale agriculture

Most-Used Applications of Artificial Intelligence

- Google’s AI-powered predictions (E.g.: Google Maps)

- Ride-sharing applications (E.g.: Uber, Lyft)

- AI Autopilot in Commercial Flights

- Spam filters in email

- Plagiarism checkers and tools

- Facial Recognition

- Search recommendations

- Voice-to-text features

- Smart personal assistants (E.g.: Siri, Alexa)

- Fraud protection and prevention

Artificial Intelligence Jobs

According to Indeed, demand for AI skills has more than doubled over the last three years, with job postings up by 119%. Training an image-processing algorithm can now be done in minutes, whereas the same task used to take hours. Compared with the number of job postings, there is a shortage of workers with the necessary skills. A few skills to learn before diving into an AI career are Bayesian networks and neural nets, computer science (coding experience with programming languages), physics, robotics, and various levels of math such as calculus and statistics. If you’re interested in building a career in AI, you should be aware of the various job roles available in this field.

Let’s take a closer look at the different job roles in the world of AI and the skills each one requires:

Machine Learning Engineer

The role of a Machine Learning Engineer suits someone from a Data Science or applied research background. S/he must be able to demonstrate a thorough understanding of multiple programming languages. S/he should also be able to apply predictive models and leverage NLP when working with enormous datasets. Familiarity with IDEs such as Eclipse and IntelliJ is important too. Machine Learning Engineers are mostly responsible for building and managing platforms for various ML projects. The annual median salary of an ML Engineer is said to be $114,856. Companies typically hire individuals who have a master’s degree and in-depth knowledge of Java, Python, and Scala. Skill requirements may vary from one company to another, but analytical skills and experience with cloud applications are a plus.

Data Scientist

Collecting, analyzing, and interpreting large and complex datasets by leveraging ML and predictive analytics is one of the main tasks of a Data Scientist. Data Scientists also help develop algorithms that enable data to be collected and cleaned for analysis. The annual median salary of a Data Scientist is $120,931, and the skills required are as follows:

- Hive

- Hadoop

- MapReduce

- Pig

- Spark

- Python

- Scala

- SQL

Although the skills required vary from one company to another, most companies require a master’s or doctoral degree in computer science. For a Data Scientist who wants to become an AI developer, an advanced computer science degree is a clear advantage. Apart from the ability to understand unstructured data, s/he must have strong analytical and communication skills to share findings with business leaders.

Business Intelligence Developer

A career in AI also includes the position of Business Intelligence (BI) developer. One of the main objectives of this role is to analyze complex datasets to identify business and market trends. A BI developer earns an annual median salary of $92,278. The responsibilities of a BI developer include designing, modeling, and maintaining complex data in cloud-based data platforms. If you’re interested in this role, you must have strong technical as well as analytical skills. You should also be able to communicate solutions to colleagues who don’t have technical knowledge, and to display problem-solving skills. A BI developer is required to have a bachelor’s degree in a related field. Work experience or certifications are highly desired as well.

Skills required would be data mining, SQL queries, SQL server reporting services, BI technologies, and data warehouse design.

Research Scientist

A research scientist is one of the leading careers in Artificial Intelligence. S/he should be an expert in multiple disciplines such as applied mathematics, deep learning, machine learning, and computational statistics. Candidates must have extensive knowledge of computer perception, graphical models, reinforcement learning, and NLP.

Similar to Data Scientists, a research scientist is expected to have a master’s or doctoral degree in computer science. The annual median salary is said to be $99,809.

Most companies are on the lookout for someone who has an in-depth understanding of parallel computing, distributed computing, benchmarking and machine learning.

Big Data Engineer/Architect

Among the various jobs in the field of AI, Big Data Engineers/Architects have the best-paying role, with an annual median salary of $151,307. They play a vital role in developing an ecosystem that enables business systems to communicate with each other and collate data. Compared with Data Scientists, Big Data Architects or Engineers are typically given tasks related to planning, designing, and developing the big data environment on Spark and Hadoop.

Companies look to hire individuals who demonstrate experience in C++, Java, Python, and Scala. Data mining, data visualization, and data migration skills are an advantage. A Ph.D. in mathematics or any related computer science field would be a bonus.

Career Trends in Artificial Intelligence

Jobs in AI have been increasing steadily over the past few years, and this growth is expected to continue. 57% of Indian companies are looking to hire the right talent to match market sentiment. On average, aspirants who have successfully transitioned into AI roles have seen a 60-70% hike in salary. Mumbai leads, followed by Bangalore and Chennai. According to research, demand for AI jobs has increased, but the supply of skilled workers has not kept pace. According to the WEF, 133 million new roles could emerge by 2022 as work is redivided between humans, machines, and algorithms.

What is Machine Learning?

Machine learning is a subset of artificial intelligence (AI) that embodies one of the core tenets of Artificial Intelligence: the ability to learn from experience rather than just from instructions.

Machine Learning algorithms automatically learn and improve from experience. They do not need explicit instructions to produce the desired output. Instead, they learn by observing the data sets available to them and comparing those data sets with examples of the final output. They examine the final output for recognisable patterns and try to reverse-engineer the features that produce it.

Artificial Intelligence vs Machine Learning

AI and ML are often used interchangeably, and although Machine Learning is a subset of AI, there are a few differences between the two. For your reference, a few differences have been listed below.

| Artificial Intelligence | Machine Learning |
| --- | --- |
| AI aims to build smart computer systems that, like humans, can solve complex problems. | ML allows machines to learn from data so that they can give accurate output. |
| Mainly deals with structured, semi-structured, and unstructured data. | ML deals with structured and semi-structured data. |
| Based on capability, AI can be divided into three types: Weak AI, General AI, and Strong AI. | ML is also divided into three types: Supervised learning, Unsupervised learning, and Reinforcement learning. |
| AI systems are concerned with maximizing the chances of success. | Machine learning is mainly concerned with accuracy and patterns. |
| AI works towards creating an intelligent system that can perform various complex tasks. | ML allows machines to learn from data so that they can give an accurate output. |
| AI enables a machine to simulate human behavior. | Machine learning is a subset of AI. It allows a machine to learn automatically from past data without being explicitly programmed. |
| Applications of AI include Siri, customer support using chatbots, expert systems, online game playing, intelligent humanoid robots, etc. | Applications of ML include online recommender systems, Google search algorithms, Facebook auto friend-tagging suggestions, etc. |

What are the different kinds of Machine Learning?

The types of Machine Learning are:

- Supervised Learning

- Unsupervised Learning

- Semi-supervised learning

- Reinforcement Learning

What is Supervised Learning?

Supervised Machine Learning applies what it has learnt from past data to produce the desired output. The algorithm is usually trained on a specific dataset, from which it infers a function. It then uses this inferred function to predict the final output, delivering an approximation of it.

This is called supervised learning because the algorithm needs to be taught with a specific dataset to help it form the inferred function. The data set is clearly labelled to help the algorithm ‘understand’ the data better. The algorithm can compare its output with the labelled output to modify its model to be more accurate.
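The perceptron is one of the simplest algorithms that follows this loop: predict, compare the prediction with the label, and adjust the model. The minimal Python sketch below learns the logical AND function from four labelled examples. The learning rate and the number of epochs are arbitrary choices.

```python
def train_perceptron(samples, labels, learning_rate=0.1, epochs=20):
    """Learn weights and a bias for a binary linear classifier.

    samples: list of feature tuples; labels: list of 0/1 targets.
    """
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            # Compare the output with the label and nudge the model towards it.
            error = target - prediction
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias

def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

# Labelled training data: the logical AND function.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # → [0, 0, 0, 1]
```

The weights change only when a prediction is wrong. Once every labelled example is classified correctly, training stops having any effect.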

What is Unsupervised Learning?

With unsupervised learning, training data is still provided, but it is not labelled. The algorithm explores the attributes of the training data to find patterns and make inferences. It then forms its own logic for describing these patterns and bases its output on that logic.
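k-means clustering is a classic example of unsupervised learning. Given unlabelled points, it repeatedly assigns each point to its nearest centre, then moves each centre to the mean of its points. The minimal Python sketch below works on 2-D points. The number of clusters, the starting centres, and the data are assumptions chosen for illustration.

```python
import math

def assign(points, centres):
    """Group each point with its nearest centre."""
    clusters = [[] for _ in centres]
    for p in points:
        nearest = min(range(len(centres)), key=lambda i: math.dist(p, centres[i]))
        clusters[nearest].append(p)
    return clusters

def kmeans(points, centres, max_iterations=10):
    """Lloyd's algorithm for 2-D points, starting from the given centres."""
    centres = list(centres)
    for _ in range(max_iterations):
        clusters = assign(points, centres)
        # Move each centre to the mean of its cluster (keep it if the cluster is empty).
        new_centres = []
        for centre, members in zip(centres, clusters):
            if members:
                xs, ys = zip(*members)
                new_centres.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centres.append(centre)
        if new_centres == centres:
            break  # centres have stopped moving: converged
        centres = new_centres
    return centres, assign(points, centres)

data = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
centres, clusters = kmeans(data, centres=[(0, 0), (10, 10)])
print(centres)
print(clusters)
```

No labels appear anywhere in the code. The two groups emerge purely from the distances between points.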

What is Semi-supervised Learning?

This is similar to the above two, with the only difference being that it uses a combination of labelled and unlabelled data. This solves the problem of having to label large data sets: the programmer can label just a small subset of the data and let the machine figure out the rest. This method is usually used when labelling the whole data set is not feasible, either because of the large volume of data or because of a lack of skilled resources to label it.
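One simple semi-supervised strategy is self-training. A model built from the few labelled examples labels the unlabelled points it is most confident about, and those points then join the labelled set. The Python sketch below uses a 1-nearest-neighbour rule on 1-D data and treats "closest to an already labelled point" as "most confident". Both are simplifying assumptions, and the values and labels are made up.

```python
def self_train(labelled, unlabelled):
    """Propagate labels to unlabelled 1-D points, most confident first.

    labelled: dict mapping value -> label; unlabelled: list of values.
    Each round, the unlabelled point nearest to any labelled point takes
    that neighbour's label and becomes part of the labelled set.
    """
    labelled = dict(labelled)
    remaining = list(unlabelled)
    while remaining:
        best_point, best_label, best_dist = None, None, float("inf")
        for u in remaining:
            for value, label in labelled.items():
                if abs(u - value) < best_dist:
                    best_point, best_label, best_dist = u, label, abs(u - value)
        labelled[best_point] = best_label
        remaining.remove(best_point)
    return labelled

# Only two points are labelled by hand; the rest are inferred.
seed = {1.0: "small", 10.0: "large"}
result = self_train(seed, [2.0, 3.0, 4.5, 8.0, 9.0, 6.5])
print(result)
```

Labelling the easiest points first lets each new label help with the next, harder point. The risk is that an early mistake propagates, so real systems usually add a confidence cut-off.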

What is Reinforcement Learning?

Reinforcement learning depends on the algorithm’s environment. The algorithm learns by interacting with its environment and the data sets it has access to. Through a process of trial and error, it discovers ‘rewards’ and ‘penalties’ that are set by the programmer. The algorithm tends to move towards maximising these rewards, which in turn produces the desired output. It’s called reinforcement learning because the rewards the algorithm encounters reinforce that it is on the right path. This reward feedback helps the system shape its future behaviour.
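Tabular Q-learning is one standard way to implement this trial-and-error loop. In the Python sketch below, the environment is a hypothetical five-cell corridor. The agent starts in cell 0, earns a reward of +1 for reaching cell 4, and pays a small penalty for every other step. The hyperparameters (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are illustrative assumptions.

```python
import random

N_STATES = 5          # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or right

def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # reward for reaching the goal
    return next_state, -0.01, False     # small penalty for every other step

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit what is known, occasionally explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate towards reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told to move right. The learned policy favours +1 in every cell because that action leads to the highest discounted reward.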

What is Deep Learning?

Deep Learning is a subfield of machine learning concerned with algorithms called artificial neural networks, which are inspired by the structure and function of the brain. Deep Learning is used to teach machines what comes naturally to us humans. Using Deep Learning, a computer model can be taught to perform classification tasks on images, text, or sound.

Deep Learning is becoming popular because these models can achieve state-of-the-art accuracy. They are trained on large labelled data sets using neural network architectures.

Simply put, Deep Learning uses brain simulations in the hope of making learning algorithms more efficient and simpler to use.

How is Deep Learning Used: Applications

Deep Learning applications have started to surface but have a much greater scope for the future. Listed here are some of the deep learning applications that will rule the future.

- Adding image and video elements – Deep learning algorithms are being developed to add colour to black-and-white images and to automatically add sounds to movies and video clips.

- Automatic Machine Translations – Automatically translating text into other languages or translating images to text. Though automatic machine translations have been around for some time, deep learning is achieving top results.

- Object Classification and Detection – This technology helps in applications like face detection for attendance systems in schools, or spotting criminals through surveillance cameras. Object classification and detection are achieved by using very large convolutional neural networks and have use-cases in many industries.

- Automatic Text Generation – A machine learning model learns from a large corpus of text and uses what it has learnt to write new text. Such models are highly effective at generating meaningful text and can even mimic the tone of the corpus in their output.

- Self-Driving cars – A lot has been said about self-driving cars, which are probably the most popular application of deep learning. Here the model needs to learn from a large set of data to understand all the key parts of driving. Deep learning algorithms are used because their performance keeps improving as more input data is fed in.

- Applications in Healthcare – Deep Learning shows promising results in detecting diseases such as breast cancer and skin cancer. It also has great scope in mobile and monitoring apps, prediction, and personalised medicine.

Why is Deep Learning important?

Today we can teach machines to read, write, see, and hear by feeding enough data into learning models, and we can make these machines respond the way humans do, or even better. Access to abundant computational power, combined with the large amount of data generated through smartphones and the internet, has made it possible to apply deep learning to real-life problems.

Deep learning is booming, and tech leaders like Google are already applying it wherever possible.

The performance of a deep learning model keeps improving as the amount of input data increases. Traditional Machine Learning models, by contrast, tend to plateau beyond a certain amount of data.

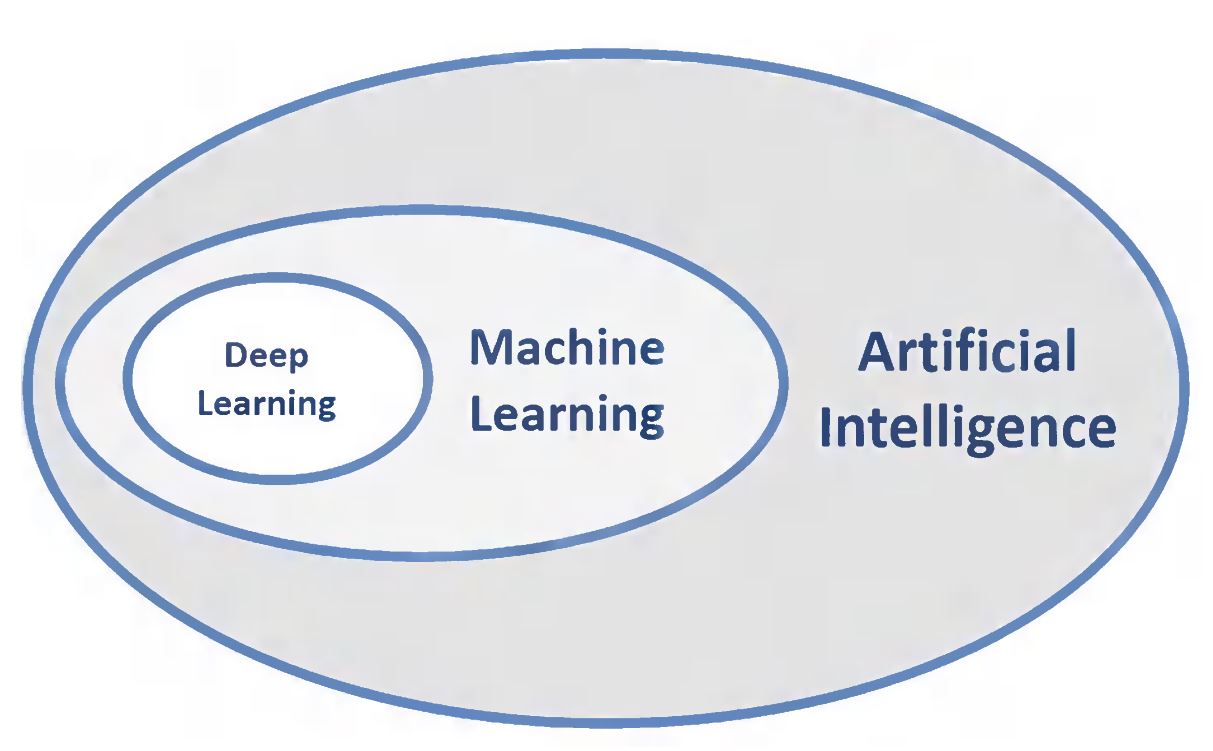

What is the relationship between AI, ML, and DL?

As the above image portrays, the three concentric ovals show DL as a subset of ML, which is in turn a subset of AI. AI is therefore the all-encompassing concept, and it emerged first. It was followed by ML, which thrived later, and lastly by DL, which now promises to take the advances of AI to another level.

What is NLP?

A component of Artificial Intelligence, Natural Language Processing is the ability of a machine to understand human language as it is spoken or written. The objective of NLP is to understand and decipher human language in order to produce a useful result. Most NLP techniques use machine learning to draw insights from human language.

What are the different steps involved in NLP?

The steps involved in implementing NLP are:

- The computer program collects all the data required. This includes database files, spreadsheets, email communication chains, recorded phone conversations, notes, and all other relevant data.

- An algorithm removes all the stop words from this data and normalizes words that have the same meaning.

- The remaining text is divided into groups known as tokens.

- The NLP program analyzes the results to deduce patterns, their frequency, and other statistics, in order to understand how the tokens are used and where they apply.
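The later steps can be sketched in a few lines of Python. This sketch tokenizes first and then removes stop words, which is the order most NLP toolkits follow. The tiny stop-word list, the normalisation map, and the sample emails are assumptions for illustration. Libraries such as NLTK and spaCy ship far more complete resources.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny resources for illustration.
STOP_WORDS = {"the", "a", "an", "is", "to", "and", "of", "my", "i", "was", "on"}
NORMALISE = {"refunded": "refund", "refunds": "refund", "cancelled": "cancel"}

def preprocess(text):
    # Split into lowercase word tokens.
    tokens = re.findall(r"[a-z']+", text.lower())
    # Drop stop words, then map variants of a word to a single form.
    return [NORMALISE.get(t, t) for t in tokens if t not in STOP_WORDS]

def token_stats(documents):
    """Count how often each normalised token appears across documents."""
    counts = Counter()
    for doc in documents:
        counts.update(preprocess(doc))
    return counts

emails = [
    "I was refunded the wrong amount on my order",
    "My order was cancelled and I want a refund",
    "Refunds take too long to process",
]
print(token_stats(emails).most_common(2))  # → [('refund', 3), ('order', 2)]
```

Without the normalisation step, "refunded", "refund", and "refunds" would be counted separately, and the most common theme in the emails would be hidden.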

Where is NLP used?

Some of the common applications that are being driven by Natural Language Processing are:

- Language translation applications

- Word processors that check the grammatical accuracy of text

- Call centres use Interactive Voice Response (IVR) to respond to user requests; IVR is an application of NLP

- Personal virtual assistants such as Siri and Cortana are a classic example of NLP

What is Python?

Python is a popular object-oriented programming language created by Guido van Rossum and first released in 1991. It is one of the most widely used programming languages for web development, software development, system scripting, and many other applications.

Why is Python so popular?

There are many reasons behind Python’s popularity:

- Its easy-to-learn syntax improves readability and hence reduces the cost of program maintenance

- It supports modules and packages, which encourages code re-use

- It enables increased productivity: there is no separate compilation step, which makes the edit-test-debug cycle much faster

- Debugging in Python is easier than in many other programming languages

Where is Python used?

Python is used in many real-world applications such as:

- Web and Internet Development

- Applications in Desktop GUI

- Science and Numeric Applications

- Software Development Applications

- Applications in Business

- Applications in Education

- Database Access

- Games and 3D Graphics

- Network Programming

How can I learn Python?

There is a lot of content online in the form of videos, blogs, and e-books for learning Python, and you can extract as much information from it as you want. If you want more practical learning in a guided format, you can sign up for Python courses offered by many ed-tech companies and learn through hands-on projects with an expert mentor. There are many offline classroom courses available too. Great Learning’s Artificial Intelligence and Machine Learning course has an elaborate module on Python, delivered along with projects and lab sessions.

What is Computer Vision?

Computer Vision is a field of study that develops techniques enabling computers to ‘see’ and understand digital images and videos. The goal of computer vision is to draw inferences from visual sources and apply them to solving real-world problems.

What is Computer Vision used for?

There are many applications of Computer Vision today, and the future holds an immense scope.

- Facial Recognition for surveillance and security systems

- Retail stores also use computer vision for tracking inventory and customers

- Autonomous Vehicles

- Computer Vision in medicine is used for diagnosing diseases

- Financial Institutions use computer vision to prevent fraud, allow mobile deposits, and display information visually

What is Deep Learning Computer Vision?

The following are some uses of deep learning for computer vision:

- Object Classification and Localisation: Identifying objects of specific classes in images or videos, along with their locations, which are usually highlighted with a box drawn around each object.

- Semantic Segmentation: Using neural networks to classify every pixel in an image or video.

- Colourisation: Converting greyscale images to full-colour images.

- Reconstructing Images: Reconstructing corrupted and tampered images.
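Most of these tasks are built on convolutional layers, which slide a small grid of weights (a kernel) across an image. Here is a minimal pure-Python sketch of that operation, using a hand-picked vertical-edge kernel on a tiny greyscale image. In a real CNN the kernel weights are learned rather than chosen by hand. Like most deep learning libraries, this sketch computes cross-correlation, so the kernel is not flipped.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        output.append(row)
    return output

# A 4x4 greyscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Responds strongly where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
for row in convolve2d(image, vertical_edge):
    print(row)  # → [0, 18, 0] for every row
```

The output is large only in the column where dark meets bright, so the layer has "found" the edge. Stacking many such learned filters lets a network detect progressively more complex shapes.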

What are Neural Networks?

A Neural Network is a series of algorithms that loosely mimic the functioning of the human brain to determine the underlying relationships and patterns in a set of data.

What are Neural Networks used for?

The concept of Neural Networks has found application in developing trading systems for the finance sector. They also assist in the development of processes such as time-series forecasting, security classification, and credit risk modelling.

What are the different Neural Networks?

Here are six different types of Neural Networks:

- Feedforward Neural Network (Artificial Neuron): Data inputs travel in only one direction, entering at the input nodes and exiting at the output nodes.

- Radial basis function Neural Network: A radial basis function neural network works by considering the distance of a point from a centre.

- Kohonen Self Organizing Neural Network: The objective here is to map input vectors of arbitrary dimension onto a discrete map made up of neurons.

- Recurrent Neural Network (RNN): A Recurrent Neural Network saves the output of a layer and feeds it back to the input to help predict the layer’s next output.

- Convolutional Neural Network: It is similar to a feedforward neural network, with neurons that have learnable weights and biases. It is applied in signal and image processing.

- Modular Neural Networks: A collection of several different neural networks, each processing a sub-task. Each network has its own set of inputs, distinct from those of the other networks contributing to the output.
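To illustrate the first type in the list, here is a minimal forward pass through a feedforward network with one hidden layer. Data flows from the inputs to the hidden neurons to a single output, and never backwards. The weights are hand-set (an assumption) so that the network computes XOR, a non-linear function that a single neuron cannot represent. In practice the weights would be learned, for example by backpropagation.

```python
def step(z):
    """Threshold activation: the neuron fires (1) when its input is positive."""
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [
        step(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def feedforward(x):
    # Hidden layer: neuron 1 behaves like OR, neuron 2 like AND.
    hidden = layer(x, weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output neuron: "OR and not AND", which is XOR.
    return layer(hidden, weights=[[1, -1]], biases=[-0.5])[0]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, feedforward(x))
```

The hidden layer is what makes this work. Each hidden neuron draws one straight line through the input space, and the output neuron combines them into a shape that no single line could separate.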

What are the benefits of Neural Networks?

The three key benefits of Neural Networks are:

- The ability to learn and model non-linear and complex relationships

- The ability to generalize: after learning from the training data, ANNs can infer relationships in unseen data as well

- ANNs do not impose any restrictions on the input variables

Artificial Intelligence Movies

Many movies have been made over the years based on concepts related to Artificial Intelligence. These movies give a glimpse of what the real world could look like in the future. Some of these movies, and the AI characters and elements in them, are inspired by real-life events. Other times, they are products of imagination that could inspire someone to recreate them in real life. Hence, Artificial Intelligence movies are not just works of fiction and offer much more than entertainment. For Artificial Intelligence enthusiasts, they are a source of motivation, inspiration, and sometimes knowledge. They broaden the scope of Artificial Intelligence and push the boundaries of human capability and imagination when it comes to applying Artificial Intelligence to real-world problems.

Future of Artificial Intelligence

As humans, we have always been fascinated by technological change and by fiction, and right now we are living amid the greatest advancements in our history. Artificial Intelligence has emerged as the next big thing in technology. Organizations across the world are coming up with breakthrough innovations in artificial intelligence and machine learning. Artificial intelligence is not only shaping the future of every industry and every human being; it has also been the main driver of emerging technologies like big data, robotics, and IoT. Considering its growth rate, it will continue to act as a technological innovator for the foreseeable future. Hence, there are immense opportunities for trained and certified professionals to enter a rewarding career. As these technologies continue to grow, they will have an increasing impact on society and on our quality of life.

Getting certified in AI will give you an edge over other aspirants in this industry. With advancements such as Facial Recognition, AI in Healthcare, Chat-bots, and more, now is the time to build a path to a successful career in Artificial Intelligence. Virtual assistants have already made their way into everyday life, helping us save time and energy. Self-driving cars from tech giants like Tesla have already shown us the first step into the future. AI can help reduce and predict the risks of climate change, allowing us to make a difference before it’s too late. And all of these advancements are only the beginning; there’s so much more to come. According to the WEF, 133 million new roles could emerge by 2022 as work is redivided between humans, machines, and algorithms.

Important FAQs on Artificial Intelligence (AI)

Ques. Where is AI used?

Ans. Artificial Intelligence is used across industries globally. Some of the industries that have delved deep into AI to find new applications are E-commerce, Retail, Security and Surveillance, Sports Analytics, Manufacturing and Production, and Automotive, among others.

Ques. How is AI helping in our life?

Ans. Virtual digital assistants have changed the way we do our daily tasks. We interact with Alexa and Siri every day for needs big and small. Their natural language abilities and their capacity to learn without human intervention explain why they are developing so fast and becoming more and more human-like in their interactions.

Ques. Is Alexa an AI?

Ans. Yes, Alexa is an AI-powered virtual assistant.

Ques. Is Siri an AI?

Ans. Yes. Just like Alexa, Siri is an AI-powered assistant that uses advanced machine learning technologies to function.

Ques. Why is AI needed?

Ans. AI can make many processes better, faster, and more accurate. It has some very crucial applications too, such as identifying and predicting fraudulent transactions, faster and more accurate credit scoring, and automating labour-intensive data management practices. Artificial Intelligence improves existing processes across industries and applications. It also helps develop new solutions to problems that are overwhelming to deal with manually.
